Mayuri Madhur: But what is Machine Learning? A complete starter pack

Understanding Machine Learning: A Guide for Beginners

Machine learning is an exciting and rapidly evolving field that's attracting considerable attention in the tech community. This blog post will guide beginners through the foundational knowledge of machine learning, the interplay between machine learning and AI, and dive into the main types of machine learning. Whether you're new to the field or seeking a quick refresher, this guide is for you.

What is Machine Learning?

Machine learning is the science and art of programming computers so that they can learn from data. In conventional programming, computers generate output from input data using rigid, hand-written rules. Machine learning instead lets machines learn the rules themselves by discerning patterns between input data and its output. As a result, the learned rules can differ and behave differently depending on the data at hand. Simply put, in traditional programming, humans do the thinking; in machine learning, machines do the thinking.

Machine learning becomes particularly useful when dealing with complex situations where manually determining the rules is impractical. For instance, predicting a car resale price based on just the car's age is straightforward; it gets complicated when you factor in additional variables such as the car's condition and odometer reading.
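
As a rough illustration of a machine "learning the rule" for the simple case, the sketch below fits a straight line to the age and price figures used in the talk (a brand new car at $20,000, dropping to $17,000 at three years). The helper name `fit_line` and the choice of an ordinary least-squares fit are assumptions made for this example, not something the talk prescribes.

```python
# Resale data from the talk: car age in years -> resale price in dollars.
ages = [0, 1, 2, 3]
prices = [20000, 19000, 18000, 17000]

def fit_line(xs, ys):
    """Return (slope, intercept) of the least-squares line through the points."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept

# The "rule" (lose a fixed amount per year) is learned from data, not hard-coded.
slope, intercept = fit_line(ages, prices)
predicted = slope * 4 + intercept
print(round(predicted))  # price estimate for a four-year-old car
```

With only one input variable a person can spot this rule by eye; the same fitting idea becomes valuable once condition and odometer readings are added and the rule is no longer obvious.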

Key Components of Machine Learning

- Data: The most crucial ingredient for machine learning. Previous observations serve as the learning material for our machines. The more diverse your data, the better the results. Data can be collected manually (higher quality, but expensive) or automatically (lower quality, but cheap).

- Features: The attributes or factors that the machine learns from. Choosing the right features dramatically impacts machine learning as some features are more relevant than others. Notably, some features may not be explicitly visible and need to be derived from the dataset.

- Algorithms: These identify relationships in the data and use them to predict results. The choice of algorithm affects model precision, performance, and size.

Machine learning sits at the intersection of data, features, and algorithms.
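
To make the point about derived features concrete, here is a small sketch. The field names (`purchase_year`, `odometer_km`), the sample records, and the reference year are all hypothetical and chosen only for illustration; the idea is simply that a useful feature such as a car's age may have to be computed from what the raw data contains.

```python
CURRENT_YEAR = 2024  # assumed reference year for this illustration

# Hypothetical raw records: neither "age" nor "usage per year" is stored directly.
cars = [
    {"purchase_year": 2020, "odometer_km": 40000},
    {"purchase_year": 2023, "odometer_km": 5000},
]

def add_derived_features(car, current_year=CURRENT_YEAR):
    """Return a copy of the record with two features derived from the raw fields."""
    age = current_year - car["purchase_year"]
    # Guard against division by zero for cars bought in the current year.
    km_per_year = car["odometer_km"] / max(age, 1)
    return {**car, "age_years": age, "km_per_year": km_per_year}

enriched = [add_derived_features(car) for car in cars]
print(enriched[0]["age_years"], enriched[0]["km_per_year"])
```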

The Relationship Between Machine Learning, AI, and Deep Learning

Artificial intelligence (AI) is a broad field of study, encompassing several parts, with machine learning being just one of them. Neural networks are a type of machine learning, and deep learning is essentially a modern way of building, training, and using neural networks.

Types of Machine Learning

1. Classical Machine Learning

Classical machine learning includes two main types: Supervised and Unsupervised learning.

- Supervised Learning: The data is labeled, and the learning process is akin to having a teacher. There are two types: classification (categorizing data based on its features) and regression (forecasting a number rather than a category).

- Unsupervised Learning: The model works independently to discover inherent data information. A popular use of unsupervised learning is clustering, where algorithms find natural clusters in the data.
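
The clustering idea can be sketched with K-means, the clustering method the talk describes: assign every point to its nearest center, move each center to the average of its points, and repeat. This is a minimal version under stated assumptions: the sample points are made up, and the starting centers are fixed to the first and last point (rather than placed at random) so that the run is repeatable.

```python
def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))

def mean_point(points):
    n = len(points)
    return tuple(sum(coords) / n for coords in zip(*points))

def kmeans(points, centers, iterations=10):
    """Alternate between assigning points to the nearest center and
    moving each center to the mean of the points assigned to it."""
    labels = []
    for _ in range(iterations):
        labels = [
            min(range(len(centers)), key=lambda i: squared_distance(p, centers[i]))
            for p in points
        ]
        new_centers = []
        for i, old_center in enumerate(centers):
            members = [p for p, label in zip(points, labels) if label == i]
            # Keep the old center if no point was assigned to it.
            new_centers.append(mean_point(members) if members else old_center)
        centers = new_centers
    return centers, labels

# Made-up 2-D points forming two visibly separate groups; no labels are given.
points = [(1.0, 1.0), (1.5, 2.0), (1.0, 0.5), (8.0, 8.0), (9.0, 9.0), (8.0, 9.5)]
centers, labels = kmeans(points, centers=[points[0], points[-1]])
print(labels)
```

Note that nobody told the algorithm which group a point belongs to; the grouping comes purely from the distances between points.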

2. Reinforcement Learning

Reinforcement learning is applicable when there's no data but a simulation environment exists. Here, a system learns from its actions, much like how a baby learns from its environment.
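
A toy version of learning from rewards and penalties might look like the sketch below. It uses tabular Q-learning, one common reinforcement learning algorithm picked here for illustration; the source does not name a specific algorithm. The five-cell corridor, the reward values, and the hyperparameters are all assumptions: cell 0 stands for the fire (penalty), cell 4 for the water tap (reward), and the agent starts in the middle.

```python
import random

FIRE, TAP = 0, 4                      # terminal cells of a 5-cell corridor (assumed layout)
ACTIONS = {"left": -1, "right": +1}

def step(state, action):
    """Simulated environment: return (next_state, reward, done)."""
    nxt = state + ACTIONS[action]
    if nxt == FIRE:
        return nxt, -1.0, True        # penalty for walking into the fire
    if nxt == TAP:
        return nxt, 1.0, True         # reward for reaching the tap
    return nxt, 0.0, False

def train(episodes=500, alpha=0.5, gamma=0.9, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(5) for a in ACTIONS}
    for _ in range(episodes):
        state, done = 2, False
        while not done:
            # Explore with purely random moves; Q-learning still learns the
            # value of acting greedily because its update uses the max below.
            action = rng.choice(list(ACTIONS))
            nxt, reward, done = step(state, action)
            best_next = 0.0 if done else max(q[(nxt, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])
            state = nxt
    return q

q = train()
# Greedy policy: in each non-terminal cell, pick the action with the highest value.
policy = {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in (1, 2, 3)}
print(policy)
```

No labeled dataset is involved: the only feedback is the reward signal produced by the simulated environment.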

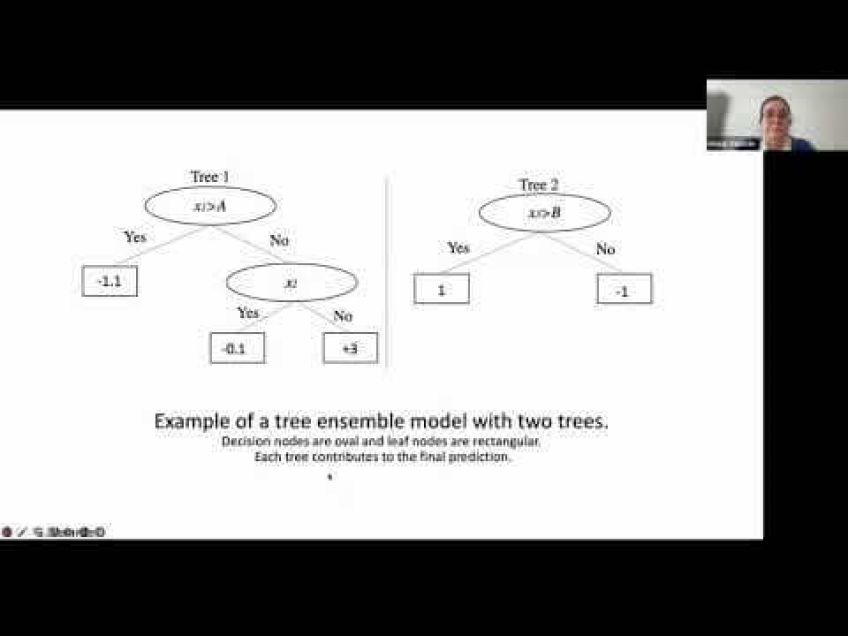

3. Ensemble Methods

Ensemble methods involve combining diverse sets of learners (individual models) to improve stability and predictive power. The principle is that a group of weak learners forms a strong learner, thereby increasing the model's accuracy. Stacking, Bagging, and Boosting are popular ensemble methods.
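
As a sketch of how weak learners can be combined, the example below implements a bare-bones form of bagging: each weak learner is trained on a bootstrap sample (drawn with replacement) and the ensemble answers by majority vote. The one-dimensional toy data, the threshold "stump" used as the weak learner, and names such as `fit_stump` are assumptions made for this illustration.

```python
import random
from collections import Counter

def fit_stump(sample):
    """Weak learner: pick the threshold t (taken from the sample) that makes
    the fewest mistakes with the rule 'predict 1 if x >= t, else 0'."""
    best_t, best_errors = None, None
    for t in sorted({x for x, _ in sample}):
        errors = sum((x >= t) != bool(y) for x, y in sample)
        if best_errors is None or errors < best_errors:
            best_t, best_errors = t, errors
    return best_t

def bagging_fit(data, n_models=25, seed=0):
    rng = random.Random(seed)
    stumps = []
    for _ in range(n_models):
        # Bootstrap: sample with replacement, same size as the original data.
        sample = [rng.choice(data) for _ in data]
        stumps.append(fit_stump(sample))
    return stumps

def bagging_predict(stumps, x):
    """Aggregate by majority vote over all stumps."""
    votes = Counter(int(x >= t) for t in stumps)
    return votes.most_common(1)[0][0]

# Toy labeled data: the label is 1 when x is 5 or more.
data = [(x, int(x >= 5)) for x in range(10)]
stumps = bagging_fit(data)
print(bagging_predict(stumps, 1), bagging_predict(stumps, 8))
```

Each stump sees a slightly different sample and may pick a slightly different threshold; the vote smooths out those individual quirks, which is the stability benefit described above.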

4. Neural Networks

A neural network is a computing system inspired by how our brains work: a collection of simple processing nodes (neurons) and weighted connections between them. Convolutional Neural Networks (CNNs), widely used for images and video, and Recurrent Neural Networks (RNNs), which retain information from earlier inputs and so suit sequential data, are popular neural network types.
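
To make the idea of a neuron concrete, here is a minimal sketch of one as a function with several inputs and one output, plus a tiny network built from three of them. The sigmoid activation and the hand-picked weights are assumptions for illustration; in a real network the weights are learned during training rather than written by hand.

```python
import math

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron(inputs, weights, bias):
    """One neuron: weighted sum of the inputs plus a bias, then an activation."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(total)

def tiny_network(inputs):
    """Two hidden neurons feeding one output neuron (weights are hand-picked)."""
    h1 = neuron(inputs, [0.5, -0.5], 0.0)
    h2 = neuron(inputs, [-1.0, 1.0], 0.1)
    return neuron([h1, h2], [1.0, 1.0], -1.0)

print(neuron([0.0, 0.0], [1.0, 1.0], 0.0))
print(tiny_network([1.0, 2.0]))
```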

Dispelling Myths: Is Deep Learning Replacing Machine Learning?

There's a common misconception that deep learning might make machine learning obsolete. However, neural networks (including deep learning) are part of machine learning. Comparing classical machine learning models to neural networks is akin to comparing a loaded truck to a sports car: each has unique advantages and the best choice depends on the specific problem at hand.

If you’re eager to dive into the world of machine learning further, there are countless resources available online to help guide your journey. Dive in, and see where your interest in machine learning takes you!

Video Transcription

Let's get started. Hello and welcome, everyone. Thank you so much for joining in today. I am Mayuri Madhur, a software developer from India. Today we are going to go through the very basic, foundation-level information about machine learning. So if you are very new and confused about how to move forward in this domain, you are at the right place, and even otherwise it would be a good brush-up. So let's get started. The agenda for today is pretty clear: what is machine learning, why do we need it, then some basics around machine learning and its relation with AI, and then we'll dive deeper into the details of the types of machine learning. OK. So what is machine learning? Machine learning is the science and art of programming computers so that they can learn from the data that is provided to them. And its goal is also pretty clear: predict results based on the incoming data. OK, so that was what machine learning is, but why do we want machines to learn? In traditional programming, we input the data to the machines, and the machines give us the output based on some rules that we set. In machine learning, we input the data and its output to the machines.

Machines try to find out the relation between the two and figure out the rules based on which they are going to make predictions about the incoming data in the later stages. So in the first approach, the rules are rigid and the same for all kinds of data. In the second approach, the rules are formed out of the relationship between the data and the output, so these rules will be different and act differently on different kinds of data. In the first approach, we do the thinking; in the second approach, the machine does the thinking. OK, you might think: why can't we just feed the rules and let the machine do the input and output part? Why do we need the machines to learn? Let's take a step back. You are given this data set with the age of the car and the resale price that you are going to get. If you resell your brand new car, you are going to get $20,000. If the age of the car is one year, you are going to get $19,000.

If the age of the car is two years, $18,000; three years, $17,000. Without a second thought, you will be able to tell that at the age of four, that car is going to get you $16,000. But what about this situation? I have added a little more information to this data set: the car's condition and the odometer reading as well. Now, are we going to be able to predict the cost of the car as conveniently? There exist a lot of problem statements where we are not able to do all the thinking and calculation ourselves, and that is where machine learning comes into the picture. So I hope we don't have any doubts until now. OK, so, components of machine learning. What is the most important component one can think of from the definition? Data. Whenever we make a prediction, we take into consideration the previous observations which were made, the previous data that we have. Hence data is the most important thing for making our machines learn. The more diverse the data, the better the result. There are two types of data, manual and automatic. Manual data is of better quality and expensive, and automatic data is of lesser quality and cheap. Google captures data from us all the time.

Whenever it asks us to identify images for a CAPTCHA, it is actually fetching data from us: free labor. So the next important component is features. They are the factors for a machine to look at, and that is what the machine is learning from to make its predictions. It is very important to choose the right features because they affect the machine's learning the most. Not all details in the data might be important; we need to consider only the ones which will matter the most for the predictions. That is something we have to keep in mind. Sometimes the features might not be explicitly visible in the data, and then we have to derive those features from our data set. The most obvious component is the algorithm. It's just how you solve a problem, right? The algorithm identifies the relations in the data and uses these relations to predict the results. It affects the precision, performance, and size of the model.

So as you can see, machine learning is at the intersection of data, features, and algorithms. OK, this is super important. Look at this picture once. Artificial intelligence is a whole field of study; it's like chemistry or biology or physics. Machine learning is a part of it but not all of it. AI has other parts as well, like NLP. Neural networks, similarly, are a type of machine learning. They are super important, but they are not the only ones there. And deep learning is the modern way of building, training, and using neural networks. The study of neural networks has been around for a very long time, but it gained its present-day importance and boom with the advancement of computational power and technology in recent years. So basically, deep learning is the new architecture. These are the main types of machine learning: classical machine learning, reinforcement learning, ensembles, and neural networks. We are going to go through the details of each one of them. Now, classical machine learning comprises mainly two types, supervised and unsupervised learning. Supervised learning is when the data you have is labeled. Unsupervised learning is when you don't supervise the model; you let the model work on its own to discover the information which is inherent to the data. Supervised learning is like having a teacher to teach you.

And the teacher has the data labeled for you. For example, if you point towards a mango and call it an orange, then the teacher is going to tell you that you're wrong: it's a mango, not an orange. So that is what supervised learning basically means. Supervised learning is of two types, classification and regression. Classification is like telling the student whether they have passed or failed, whereas for regression models, the result would be the percentage scored by the student. Classification, as its name suggests, is basically categorizing the data based on the features or attributes that we have in hand, like classifying emails as spam or not, or classifying a patient as having cancer or not.

Now, we are going to go through a few classification models. This is a simple spam filter used until 2010. It uses a naive Bayes classifier, which is among the earliest and simplest machine learning models. The earliest models were based on applying pure statistical formulas to the data. It is based on Bayes' theorem, calculating the probability of a mail being spam when the number of good letters and spam letters are as shown in this slide. The class with the higher probability is chosen as the label. Later, this was not used for spam filters because, basically, hackers became smarter and started adding more good words to the email. This was called Bayesian poisoning. Next, the decision tree.

Let's help Daisy here in deciding her weekend plan. Oh, and by the way, she's fully vaccinated, so she can go out. If it is raining, she is going to stay in. If not, she's going to go out. If she's staying in and there is cable signal, she will watch a movie. If not, she can play board games. If she's going out and it is too sunny, she could go to the movies. If not, she could go shopping. This is how a decision tree is made. The machine comes up with such questions to split the data best at each step. The higher the branch, the broader the question. Decision trees are widely used in high-responsibility areas like diagnostics, medicine, and finance. They might not be used purely, but their ensemble, random forest, is extremely popular and is faster than neural networks. Support vector machines: the main objective is to segregate the given data in the best possible way. The distance between the separating hyperplane and the nearest data points on either side is known as the margin, and those nearest points, which lie on the margin, are known as the support vectors. The objective is to select a hyperplane with the maximum possible margin between the support vectors in the given data set. These are fairly accurate and used to be extremely popular until neural networks became so easily accessible to us.

And even today, support vector machines find great significance in some problem areas. But the algorithm inside is basically of the order O(N²) in terms of time complexity, so it takes a lot of time with large data sets in comparison to the other classical models like decision trees, KNN, or naive Bayes. Regression is basically classification where we forecast a number instead of a category. Examples are car price by its mileage, traffic by time of day, and demand volume by the growth of the company. Regression is perfect when something depends upon time. And it's super smooth inside: the machine simply tries to draw a line that indicates the average correlation. When the line is straight, it is linear regression. When it is curved, it's polynomial regression.

Technically speaking, the model is basically trying to find the coefficients in the equation which will help us minimize the cost function. So simply put, the machine is going to come up with the coefficients during the training. OK. A special mention to logistic regression. If this is your "huh?" moment, you're not alone. The first time I came across this fact, I also scratched my head. You can think of logistic regression as a special case of linear regression where the outcome variable is categorical and we use the log of odds as the dependent variable. In other words, it predicts the probability of occurrence of an event by fitting the data to a logit function, or basically by applying a threshold to the probability which the regression model had calculated. Unsupervised learning was invented a bit later, in the 1990s. It is used less often, but sometimes you just don't have any options. You usually have different groups of users that can be split across a few criteria. These criteria can be as simple as gender or as complex as persona or purchase procedure. Unsupervised learning can help you accomplish this task automatically. One of the most popular unsupervised learning types is clustering.

Clustering algorithms will run through your data and find these natural clusters if they exist. For your customers, that might mean one cluster of 30-something artists and another of millionaires who own dogs. You can typically modify how clusters are formed in your data.

Like, how many clusters do you want? It lets you adjust the granularity of these groups. There are various types of clustering algorithms out there, and the most popular one is K-means clustering: clustering your data points into a number K of mutually exclusive clusters. A lot of complexity surrounds how you're going to pick the right number for K. Apple Photos and Google Photos use more complex clustering.

They are looking for faces in the photos to create albums of your friends. Now, the app doesn't know how many friends you have or how they look, but it's trying to find the common facial features. And that is one of the applications of clustering. Another popular use is image compression. When saving the image to PNG, you set the palette to, let's say, 32 colors. It means clustering will help you find all the reddish pixels, calculate the average red, and set it for all the red pixels. So the fewer the colors, the lower the file size, and hence more profit. Here, we are trying to illustrate K-means clustering with the help of placing kebab kiosks in the optimal way. Here the value of K is three. So first of all, you put your three kebab kiosks at random places, then you watch how your buyers choose the nearest one, and then you move each kiosk closer to the center of its popularity. You keep doing this until you have found the perfect spots for your kebab kiosks.

These are a few other popular unsupervised learning methods. Dimensionality reduction is basically when we try to project our data to a lower-dimensional subspace which captures the gist of the data. Dimensionality reduction includes principal component analysis and latent semantic analysis, techniques which are useful for data preprocessing and topic modeling.

You can also find it useful for recommendation systems and collaborative filtering. It's barely possible to fully understand this machine abstraction, but it's possible to see some correlations on a closer look. Some of these correlate with the user's age; for example, kids play Minecraft and watch cartoons more often. Others correlate with the movie genre or user hobbies. Association rule learning is no revolution. Businesses have been using it for gaining more profit for a very long time now. It includes all the methods to analyze shopping carts, automate marketing strategies, and handle other event-related tasks. When you have a sequence of something and want to find patterns in it, you can try association rule learning. For example, putting diapers next to baby food, or peanuts next to the beer counter, can help the store grow in terms of the profit earned. In this picture, we can see a robot. It is put in an environment and asked to make a move. When it goes near fire, it is given a penalty. Suppose it goes near the water tap and closes it to stop water wastage; then it is rewarded. In this way, it learns by its own actions what is good and what is bad, just like babies. In the machine learning world, this is called reinforcement learning. So when you don't have any data, but you do have a simulation environment, that's when reinforcement learning comes into the picture. Another example of this kind is self-driving cars; they are put in a simulated environment.

There, they might use two different approaches, model-based and model-free. Model-based means that the car needs to memorize a map or parts of it. But it's a pretty outdated approach because it's impossible for a self-driving car to memorize the complete planet. In model-free learning, the car doesn't memorize every movement but tries to generalize situations and act rationally while obtaining a maximum reward. Ensemble methods. In this picture, there are a bunch of kids playing touch and tell. They are asked to touch an elephant and describe what it is to them, and each of them gives a description of what they touched. Now, individually, they might not be able to guess that it's an elephant. But when we put all their observations together, we'll get the right answer. This is an example of ensemble methods. Ensembling is the art of combining a diverse set of learners, individual models, together to improve the stability and predictive power of the model. The main principle behind the ensemble model is that a group of weak learners come together to form a strong learner, thus increasing the accuracy of the model. Some of the popular ones are random forest and gradient boosting.

They are typically used in object detection and search systems. One of the popular ensemble methods is stacking: the output of several parallel models is passed as the input to the last one, which makes the final decision. The parallel models use different algorithms.

That is because the same algorithm on the same data set won't make any sense. At the final layer, regression is mostly preferred. But more popular than stacking are bagging and boosting. Bagging is bootstrap aggregating. What we do here is generate random subsets of the data set that is given to us, and we train the same classifier on these different subsets, which were randomly generated out of the original data set.

And we take the majority vote, or the average, as the final ensemble answer. The other, more popular one is boosting. Algorithms are trained one by one, sequentially, each subsequent one paying most of its attention to the data points that were mispredicted by the previous one. We use subsets of our data, but this time they are not randomly generated. In each subsample, we take a part of the data that the previous algorithm failed to process. Thus, we make a new algorithm learn to fix the errors which were made by the previous one. Neural networks. A neural network is a class of computing system. They are created from very simple processing nodes formed into a network. They are inspired by how our brains work, although they are not as complex as our brains right now. The basic unit of computation in a neural network is a neuron, which is based on the basic computational units of our brains. Any neural network is basically a collection of neurons and connections between them. A neuron is a function with a bunch of inputs and one output. It simply takes all the inputs, does some computation on top of them, and presents the result at the output.

A connection is like a channel between these neurons. Connections have only one parameter, the weight. So while building a neural network, the weights of the connections and the functions of the neurons are the things which are being determined. Convolutional neural networks.

They are used to search for objects in photos and videos, for face recognition, style transfer, generating and enhancing images, creating effects like slow motion, and improving image quality. Nowadays CNNs are used in all the cases that involve pictures and videos. To understand how this works, let's do this: we divide the whole image of the cat into 8×8 pixel blocks and assign to each a type of dominant line. It can be horizontal, vertical, or one of the diagonals. It can also be that several would be highly visible, as you can see. This happens, and we are not always absolutely confident. The output would be several tables of sticks, which are in fact the simplest features representing object edges in the image. They are images on their own, but built with sticks. And we once again take 8×8 blocks and see how they match together, and we do this several times. This operation is called convolution, and this is what gave the method its name. Convolution can be represented as a layer of a neural network because each neuron can act as any function. Recurrent networks.

They gave us useful things like neural machine translation, speech recognition, and voice synthesis in smart assistants. It is basically about learning through a network of neurons while keeping the information learned till now. Because of this internal memory, RNNs can remember important things about the input that they have received, which allows them to be very precise in predicting what is coming next and makes them best for sequential data like voice, text, or music. So this is what the world map of machine learning looks like. Machine learning has classical learning, which has supervised and unsupervised learning. Then it has ensemble methods, which consist of stacking, bagging, and boosting. Reinforcement learning is when we don't have the data, but we do have a simulated environment. Neural networks are inspired by how our brain works. And this is basically what machine learning is all about. But here are a few things I would like to address. There are some myths: we all must have often heard stuff like "Is deep learning going to eat away machine learning?" on some of the websites.

So now we are clear that neural networks are part of machine learning, and deep learning is a new architecture for neural networks. Take the decision tree as a model; its ensemble is called random forest. If you're comparing any classical model or its ensemble to a neural network, it's like comparing a sports car to a loaded truck. Each of them has its own pros and cons, and you have to pick for yourself which one would be the best for your problem statement. For example, if your problem statement requires results in real time, then it would be better to go for a random forest than a neural network. For example, when you open your phone's camera and it is trying to draw boxes around your face, that is something a random forest, an ensemble model, is doing.

But if your problem is extremely complex and your data is extremely unpredictable and difficult, and that's just what your problem is demanding, then you can go for a neural network. So that is something I wanted to give you as a takeaway. And if you're wondering where to get started, these are some good resources with which you can get started. Thank you.