Optimizing Machine Learning-Based Detection Systems

Gissel Velarde

Senior Expert Data Scientist

Optimizing Machine Learning Models for Fraud Detection: A Deep Dive

In today's connected world, the growth of online transactions has led to an uptick in fraudulent activity, which makes robust and efficient fraud detection systems essential. In this blog post, we look at how to optimize machine learning models for fraud detection, using the Vodafone team's methodology as an example.

Why Fraud Detection?

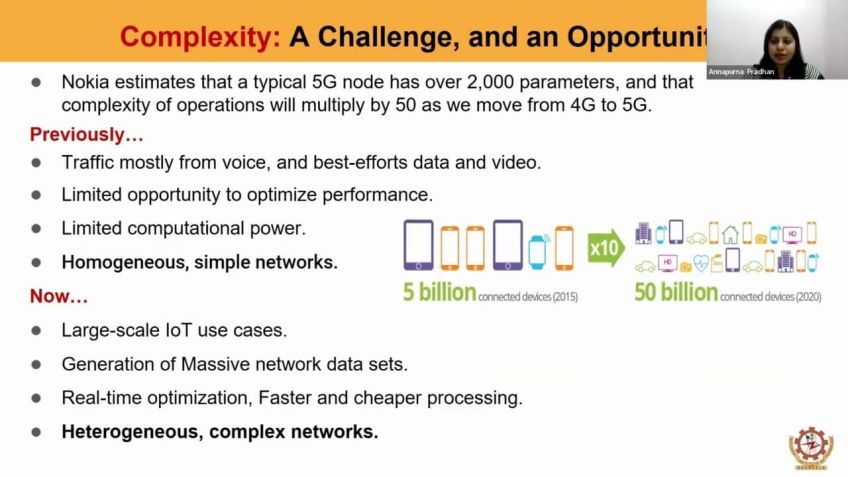

According to a 2021 estimate, global telecom revenue stands at $1.8 trillion, of which more than 2% is lost to fraud. Fraud types include equipment theft, commission fraud, and device reselling. Understanding and implementing effective fraud detection techniques is therefore crucial to minimize losses and maximize profitability.

How Machine Learning Can Help

Fraud detection is akin to telling a real tiger from a fake one: from a distance, a convincing fake is hard to spot. Fraudsters often imitate the behavior of good customers to get past detection systems. This is where machine learning can help. With proper training and fine-tuning, machine learning algorithms can pick up subtle deviations from normal behavior and improve fraud detection rates.

Overcoming the Imbalanced Data Challenge

One particular issue in fraud detection is imbalanced data: fraud cases are rare, so positive examples make up only a small fraction of the data, which makes both training and evaluation harder. Targeted experiments help us deal with this by showing how the performance of boosting systems depends on the amount of data and on its class distribution.

The Role of XGBoost

XGBoost (Extreme Gradient Boosting) is an efficient machine learning framework that has proven highly effective on this type of data. It is fast and performs strongly: in the comparison we reviewed, it was among the best supervised algorithms, with the highest F1 score and the shortest training time.
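To make the boosting idea concrete, here is a toy sketch in pure Python of gradient boosting, the technique XGBoost builds on. It is not XGBoost itself: each round fits a one-split tree (a stump) on a single feature to the negative gradient of the log loss, which is largest for the samples the current ensemble gets most wrong. XGBoost additionally uses second-order gradient information, regularization, deeper trees, and much faster split finding. All function names and the toy data are hypothetical.

```python
import math


def fit_stump(xs, residuals):
    """Fit a one-split tree on a single feature to the residuals by least squares."""
    best = None
    # Splitting at the largest value would leave the right leaf empty, so skip it.
    for threshold in sorted(set(xs))[:-1]:
        left = [r for x, r in zip(xs, residuals) if x <= threshold]
        right = [r for x, r in zip(xs, residuals) if x > threshold]
        left_value = sum(left) / len(left)
        right_value = sum(right) / len(right)
        sse = sum((r - left_value) ** 2 for r in left) + sum((r - right_value) ** 2 for r in right)
        if best is None or sse < best[0]:
            best = (sse, threshold, left_value, right_value)
    _, threshold, left_value, right_value = best
    return lambda x: left_value if x <= threshold else right_value


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def boost(xs, ys, n_trees=20, learning_rate=0.3):
    """Toy gradient boosting for binary labels: each stump corrects the current errors."""
    trees = []
    raw = [0.0] * len(xs)  # ensemble output in log-odds; 0.0 means probability 0.5
    for _ in range(n_trees):
        # Negative gradient of the log loss: largest for the samples the ensemble gets most wrong.
        residuals = [y - sigmoid(f) for y, f in zip(ys, raw)]
        tree = fit_stump(xs, residuals)
        trees.append(tree)
        raw = [f + learning_rate * tree(x) for f, x in zip(raw, xs)]
    # Every tree contributes to the final prediction.
    return lambda x: sigmoid(sum(learning_rate * t(x) for t in trees))


def log_loss(model, xs, ys):
    """Average binary cross-entropy of the model's probabilities."""
    return -sum(
        y * math.log(model(x)) + (1 - y) * math.log(1 - model(x)) for x, y in zip(xs, ys)
    ) / len(xs)


# Toy data with one feature; the classes overlap around x = 4 and x = 5.
xs = [1, 2, 3, 4, 5, 6, 7, 8]
ys = [0, 0, 0, 1, 0, 1, 1, 1]
losses = [log_loss(boost(xs, ys, n_trees=n), xs, ys) for n in (0, 1, 5, 20)]
print([round(loss, 4) for loss in losses])
```

The training log loss starts at ln 2 (about 0.693) with no trees and falls as trees are added. The learning rate of 0.3 mirrors XGBoost's default `eta`, but everything else here is a simplification.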

Understanding the XGBoost Performance Indicators

We measured XGBoost's effectiveness using precision, recall, AUCPR (Area Under the Precision-Recall Curve), and F1 score. Together, these indicators give a holistic view of performance, because they take both false negatives and false positives into account. We use AUCPR rather than the ROC AUC, which can be misleading on imbalanced data.
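A minimal pure-Python sketch of the threshold-based indicators. `precision_recall_fbeta` is a hypothetical helper; on real data, scikit-learn's `precision_score`, `recall_score`, `fbeta_score`, and `average_precision_score` (an estimate of AUCPR) cover the same ground. The helper generalizes F1 to F-beta, since the choice of beta comes up in the talk.

```python
def precision_recall_fbeta(y_true, y_pred, beta=1.0):
    """Return (precision, recall, F-beta) for binary labels where 1 marks fraud.

    beta=1 gives the F1 score; beta=0.5 favors precision, beta=2 favors recall.
    """
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # fraud correctly flagged
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # good customer flagged
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # fraud missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision == 0 and recall == 0:
        return precision, recall, 0.0
    b2 = beta ** 2
    f_beta = (1 + b2) * precision * recall / (b2 * precision + recall)
    return precision, recall, f_beta


# Toy example: 10 transactions, 3 fraudulent; the model catches 2 and raises no false alarm.
y_true = [0, 0, 0, 0, 0, 0, 0, 1, 1, 1]
y_pred = [0, 0, 0, 0, 0, 0, 0, 1, 1, 0]
precision, recall, f1 = precision_recall_fbeta(y_true, y_pred)
_, _, f2 = precision_recall_fbeta(y_true, y_pred, beta=2.0)
print(f"precision={precision:.3f} recall={recall:.3f} F1={f1:.3f} F2={f2:.3f}")
```

Because this model never raises a false alarm but misses one fraud case, F2 (about 0.71) is lower than F1 (0.80): weighting recall more heavily exposes the missed case.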

The Impact of Optimal Parameter Tuning on XGBoost

XGBoost's performance can be improved by tuning parameters such as the maximum tree depth, the learning rate, the subsample ratio, the fraction of columns sampled, and the number of estimators. In our experiments, tuning brought improvements mainly on the largest dataset; on small datasets, it did not consistently beat the default settings.
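A sketch of random search over these parameters, under stated assumptions. The candidate values for maximum depth and the number of estimators come from the talk transcript; mapping "dropping 40% or 60% of the columns" to `colsample_bytree` is an assumption; the learning-rate and subsample values are hypothetical placeholders. `sample_configs` is a hypothetical helper.

```python
import random

# Search space built from the values mentioned in the talk transcript.
SEARCH_SPACE = {
    "max_depth": [3, 6, 12, 20],
    "n_estimators": [100, 4000, 5000],
    "colsample_bytree": [0.4, 0.6, 1.0],  # assumption: keep 40%, 60%, or all columns
    "learning_rate": [0.01, 0.1, 0.3],    # hypothetical
    "subsample": [0.5, 0.8, 1.0],         # hypothetical
}


def sample_configs(space, n_iter, seed=0):
    """Random search: draw n_iter configurations, one random value per parameter."""
    rng = random.Random(seed)  # seeded so the same configurations can be drawn again
    return [
        {name: rng.choice(values) for name, values in space.items()}
        for _ in range(n_iter)
    ]


configs = sample_configs(SEARCH_SPACE, n_iter=5)
for cfg in configs:
    print(cfg)
```

Each sampled configuration would then be passed to `xgboost.XGBClassifier(**cfg)`, trained, and scored on held-out data (for example by AUCPR), keeping the best one. scikit-learn's `RandomizedSearchCV` automates this loop with cross-validation.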

Experiments and Results

Our experiments, which varied the dataset size (1,000, 10,000, and 100,000 samples) and the share of positives (50%, 45%, 25%, and 5%), showed the following:

- With a balanced dataset (50% negatives and 50% positives), performance was excellent, even with a small number of samples.

- Performance decreased as the share of positive samples fell: it declined at 25% positives, and the largest drop came at 5%.

- On smaller datasets, the default XGBoost performed very well, and tuning did not consistently improve on it. On the largest dataset (100,000 samples), the tuned XGBoost showed a small improvement, most notably in the critical 5% range.
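One way to build such an experimental grid, sketched under assumptions: a hypothetical `make_subset` helper draws a subset of a given size and positive share from a labeled pool, without replacement. The sizes and fractions mirror the experiments above; the toy pool is a stand-in, not the private dataset.

```python
import random


def make_subset(labels, n_samples, pos_fraction, seed=0):
    """Return row indices for a subset of n_samples with the requested share of positives."""
    rng = random.Random(seed)  # fixed seed so the subset can be reproduced
    positives = [i for i, y in enumerate(labels) if y == 1]
    negatives = [i for i, y in enumerate(labels) if y == 0]
    n_pos = round(n_samples * pos_fraction)
    n_neg = n_samples - n_pos
    if n_pos > len(positives) or n_neg > len(negatives):
        raise ValueError("not enough samples of one class for this configuration")
    indices = rng.sample(positives, n_pos) + rng.sample(negatives, n_neg)
    rng.shuffle(indices)  # mix the classes so row order carries no label information
    return indices


# Hypothetical stand-in pool: 100 positives and 1,000 negatives.
labels = [1] * 100 + [0] * 1000
for fraction in (0.50, 0.45, 0.25, 0.05):
    subset = make_subset(labels, n_samples=200, pos_fraction=fraction)
    print(fraction, sum(labels[i] for i in subset), len(subset))
```

For the largest, most imbalanced configuration (100,000 samples with 5% positives), the pool must contain at least 95,000 negatives; the helper raises an error rather than silently sampling with replacement.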

An in-depth review of these experiments and results can be found in our white paper on this subject.

Machine learning, and especially ensemble methods such as XGBoost, provides powerful and effective ways to combat fraud. Through thoughtful experimentation and careful optimization, these methods can help minimize fraudulent activity and protect revenue.

If you have any questions or would like more information, feel free to reach out to us to discuss this topic further.

Video Transcription

Hello, everyone, and welcome to this presentation. Thank you for being here with me. I'm going to present how to optimize machine learning models; specifically, we did this for fraud detection systems. I work at Vodafone, and this is the diverse team I work with.

Michael Beard is the head of department. Rafael is the product owner. Some of my colleagues helped me by providing previous implementations, and others helped collect the data. Most of them also work on data pipelines and other machine learning projects.

This talk is for you if you are interested in fraud detection and how we approach it with machine learning, and also if you care about accelerated data science, because I'm going to show how you can program your algorithms. It is also for you if you are facing the curse of imbalanced data. This is a problem, and I was wondering how we can tackle it, so I designed several experiments to better understand how well we can perform with our algorithms. Finally, it is for you if you care about boosting systems and how their performance depends on the data you have. By the end of the talk, you will better understand how you can use boosting systems depending on your data: both its amount and its distribution. I will also share a white paper where you can read about all the experiments I performed.

Just to motivate why fraud detection, or risk assessment in general, is interesting: this is an estimate from 2021.

Global telecom revenue is estimated at $1.8 trillion, and the loss due to fraud is more than 2%. Some of the problems we see are equipment theft, commission fraud, and device reselling. There are other types of fraud, of course, but these are perhaps the ones affecting us and other companies. Before we get into how this affects other industries: the problem of fraud detection can be seen as trying to recognize whether, for example, this image shows a real tiger or not.

How about the next picture? Can you tell whether this is a simulated tiger or a real one? And how about the last one: is this a real or a fake tiger? Indeed, the last one is a fake tiger, but if you see it from a distance, you may not notice the difference. In the same way, fraudsters try to simulate the behavior of good customers. They provide names, addresses, even IDs, all to get access to products and services. Why is the problem hard and broad? Fraudsters are continuously changing their behavior.

Once they know how to trick the system, they tweak their approach or find other ways around it to get access to products and services. Fraud cases may also be rare, so your data distribution can contain very few positive cases, which is difficult for machine learning. That's one challenge. Fraud patterns may even be unseen in training: there may be behaviors you don't capture in your training data, or events that will happen in the future that you simply don't have access to during training. Another problem we see is that fraud is often identified only after some time. You may have a delay of three, six, or even nine months before you are really sure that someone committed fraud or was a bad customer, so this is a problem when you collect the labels.

Which industries are affected by fraud? Telecommunications, of course, but also finance, e-commerce, automotive, and so on. Any company that gives customers credit or a loan and has to score their risk is affected by fraud. In terms of machine learning, we treat this as a binary classification problem in which the positive class is of extreme interest to us, so evaluation is very important.

Just to remind you what the confusion matrix tells us, imagine a doctor. The doctor tells a patient, "You're pregnant," and the patient is indeed pregnant: this is a true positive. The doctor could also make a mistake and say, "You're not pregnant," while the person is indeed pregnant. The doctor did not notice the pregnancy, and this is a false negative. Another case is when the doctor says, "You're not pregnant," and the person indeed is not pregnant: this is a true negative. Finally, in a false positive, the doctor makes a mistake by saying, "You're pregnant," while the person is not pregnant. In different applications, the types of mistakes we make affect the system in different ways. Sometimes you would prefer to have as few false negatives as possible. For example, if you're a doctor trying to identify cancer and you suspect that a person could develop it, it is much better to send this person to a checkup than to miss the cancer. In other applications, for example when your business lives from good customers, you want as few false positives as possible. You don't want to flag a good customer as a fraudster, because then your business loses money.
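The talk keeps this trade-off qualitative. One common way to act on it, shown here as a sketch rather than the method used in the talk, is to assign a cost to each type of mistake and choose the decision threshold that minimizes the total cost. The costs, scores, and helper names below are hypothetical.

```python
def total_cost(y_true, scores, threshold, cost_fp, cost_fn):
    """Total cost of flagging every sample whose score is >= threshold."""
    cost = 0.0
    for truth, score in zip(y_true, scores):
        flagged = score >= threshold
        if flagged and truth == 0:
            cost += cost_fp  # false positive: a good customer is flagged
        elif not flagged and truth == 1:
            cost += cost_fn  # false negative: a positive case is missed
    return cost


def best_threshold(y_true, scores, cost_fp, cost_fn):
    """Try every observed score (plus 'flag nothing') and keep the cheapest threshold."""
    candidates = sorted(set(scores)) + [float("inf")]
    return min(candidates, key=lambda th: total_cost(y_true, scores, th, cost_fp, cost_fn))


y_true = [0, 0, 0, 1, 0, 1]
scores = [0.1, 0.2, 0.35, 0.4, 0.6, 0.9]

# Missing a positive is expensive (the cancer example): a lower threshold wins.
print(best_threshold(y_true, scores, cost_fp=1, cost_fn=10))
# Flagging a good customer is expensive (the business example): a higher threshold wins.
print(best_threshold(y_true, scores, cost_fp=10, cost_fn=1))
```

With these toy numbers, the first call picks 0.4 (no missed positives, one false alarm) and the second picks 0.9 (no false alarms, one missed positive).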

So you need to be very careful about which types of mistakes you can afford and which you cannot. This is the terminology we are going to use. Another issue is whether your data is balanced or imbalanced, because this determines how you should evaluate your algorithms. The class distribution of the data matters a lot, and the evaluation measures behave differently under different distributions. If you have a completely balanced dataset and your classifier makes mistakes, this fraction, for example, is understood in terms of the false negatives and the false positives, and things may look easier. But if you move to an imbalanced problem, you have to be careful about how you evaluate your classifiers. As key performance indicators, we have taken precision, recall, and the area under the precision-recall curve (AUCPR) instead of the ROC AUC. The ROC curve is used by many, but you should be careful: it works well when the data is completely balanced, but when the data is imbalanced, this measure is really deceiving. Another measure that summarizes performance is the F1 score, and I'm going to talk about how to decide which variant you should use depending on your case. Just as a recap again, it's important to define a baseline.

For a precision-recall curve, the baseline is the fraction of positives: the number of positives divided by the number of positives plus negatives. That should be your baseline to start working with. Precision penalizes your model for false positives: if false positives increase, your precision goes down. Recall is penalized by false negatives: if false negatives increase, your recall goes down.
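A small illustration of why AUCPR is preferred here, using made-up scores for 100 transactions with 2% fraud. `roc_auc` and `average_precision` are hypothetical helpers (scikit-learn's `roc_auc_score` and `average_precision_score` compute the same quantities on real data); the AUCPR uses the average-precision estimate and assumes no tied scores.

```python
def roc_auc(y_true, scores):
    """ROC AUC as the probability that a random positive outscores a random negative."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def average_precision(y_true, scores):
    """AUCPR estimate: mean precision at the rank of each positive (assumes no tied scores)."""
    ranked = sorted(zip(scores, y_true), reverse=True)  # highest score first
    hits, precisions = 0, []
    for rank, (_, truth) in enumerate(ranked, start=1):
        if truth == 1:
            hits += 1
            precisions.append(hits / rank)
    return sum(precisions) / len(precisions)


# Made-up scores for 100 transactions with 2% fraud, listed from highest to lowest:
# 3 good customers outrank the first fraud case and 10 outrank the second.
labels = [0, 0, 0, 1] + [0] * 7 + [1] + [0] * 88
scores = [1.0 - i / 100 for i in range(100)]

baseline = sum(labels) / len(labels)  # fraction of positives
print(f"ROC AUC: {roc_auc(labels, scores):.3f}")
print(f"AUCPR:   {average_precision(labels, scores):.3f} (baseline {baseline:.2f})")

# Accuracy is deceiving as well: flagging nobody is right 98% of the time here.
accuracy_flag_nobody = labels.count(0) / len(labels)
print(f"Accuracy when flagging nobody: {accuracy_flag_nobody:.2f}")
```

Here the ROC AUC is about 0.93 and looks excellent, while the AUCPR of about 0.21 (against a baseline of 0.02) shows that most flagged transactions are false alarms.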

The F measure considers both precision and recall, and its beta can be chosen depending on your case: F0.5 if you care more about precision, or F2 if you care more about recall. Accuracy is a measure you should not use here, because on imbalanced datasets it gives a false indication; it is deceiving. In the white paper I'm going to share at the end, you will find some examples showing why accuracy is not a good measure to use.

Now we move on to why extreme gradient boosting, or XGBoost. If you review the literature, there is not that much on XGBoost, but in the comparisons we have seen, it is one of the best-performing algorithms. There is empirical evidence: XGBoost has been the best-performing algorithm in several Kaggle competitions. It is also very fast to compute, so you can run many experiments in a reasonable time. Just to mention how it works: XGBoost is an ensemble of decision trees. In the first pass, the algorithm creates a decision tree with all the data it has, and then it iterates over a number M of trees.

You can decide this number M. At each step, it builds a tree that focuses on the samples misclassified by the previous trees. As Andrew Ng puts it, this is similar to playing the piano: you play a piece for the first time, notice that you make errors in one part, and focus on playing only that part until you master it; then you go to the next section and practice it until you master it, and so on. That's the idea behind XGBoost and decision trees. Graphically, here is an example of a tree ensemble model with two trees, with decision nodes and rectangular leaf nodes. Each tree contributes to the final prediction.

From the literature review, we used a paper based on a synthetic dataset with nine attributes and 6 million samples. The authors used a 75/25 train/test split with five-fold cross-validation. They noticed that supervised learning algorithms performed best, and XGBoost was one of the best, with the highest F1 score; it was also the fastest. They also tested different unsupervised learning algorithms, and none of them performed as well as the supervised ones. They found that XGBOD performs really well, but when I tested it, I found that it actually requires labels.

XGBOD combines supervised and unsupervised learning, so you need labels to train it, and it is also very slow. Since we care about both training time and the F1 score, we selected XGBoost. Once you have chosen boosting, there are still different boosting systems, so which one should you use? The authors of the paper compared H2O, Spark, and XGBoost, and XGBoost is the fastest: both in the comparison of the number of training examples against the total running time and in the time per iteration in seconds.

Now I would like to tell you about some of the experiments I did for evaluation. I took a relatively large dataset of 100,000 samples, balanced at 50%: 50% negatives and 50% positives. Then I took smaller subsets of this dataset with 10,000 and 1,000 samples. This is a private dataset with more than 150 features. I then created different class distributions for each sample size (1,000, 10,000, and 100,000), because I wanted to know what happens if we reduce the positives: the fraction of positives goes from 50% to 45%, 25%, and 5%.

So we go from a balanced scenario to a highly imbalanced one. In the pipeline I used, I first prepared the numerical data by scaling it between zero and one. For XGBoost, you usually don't need to scale; this was more a practice I learned from deep learning, where you're always told to scale images. But I have seen empirically that with a large dataset of 100,000 examples, scaling helps your results. For the categorical data, we used ordinal encoders such that values unseen during training received a reserved value. Why is that? During training there are categories such as Apple, Samsung, or other device makers, and in the future a new phone may appear that we never saw during training, so it has to have a reserved value. To test vanilla XGBoost, you can import it with `from xgboost import XGBClassifier`. We selected the objective `binary:logistic` and set the `missing` parameter, which tells XGBoost that you will probably have NaN or missing values in your data. We also fixed a setting for reproducibility, and the evaluation metric is AUCPR, as we were discussing.
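A sketch of this pipeline in Python. The scaler and encoder are hypothetical pure-Python helpers; scikit-learn's `MinMaxScaler` and `OrdinalEncoder(handle_unknown="use_encoded_value", unknown_value=-1)` provide the same behavior. The XGBoost settings follow the talk, but the seed value is hypothetical, the exact `missing` value used in the talk is not recoverable from the recording, and the GPU switch depends on the XGBoost version.

```python
import math


def fit_min_max(train_values):
    """Learn min and max on training data; return a function that scales to [0, 1]."""
    lo, hi = min(train_values), max(train_values)
    span = (hi - lo) or 1.0  # avoid division by zero for constant columns
    # Values outside the training range land outside [0, 1].
    return lambda values: [(v - lo) / span for v in values]


class ReservedOrdinalEncoder:
    """Ordinal encoder that maps categories unseen during training to a reserved code."""

    def __init__(self, reserved=-1):
        self.reserved = reserved
        self.mapping = {}

    def fit(self, values):
        for v in values:
            # Codes 0, 1, 2, ... in order of first appearance.
            self.mapping.setdefault(v, len(self.mapping))
        return self

    def transform(self, values):
        return [self.mapping.get(v, self.reserved) for v in values]


# Vanilla XGBoost settings described in the talk.
VANILLA_PARAMS = {
    "objective": "binary:logistic",
    "missing": math.nan,     # assumption: XGBoost's default marker for missing values
    "random_state": 42,      # hypothetical seed, fixed for reproducibility
    "eval_metric": "aucpr",  # area under the precision-recall curve
}


def make_classifier(use_gpu=False, **overrides):
    """Build an XGBClassifier from the vanilla settings plus optional overrides."""
    from xgboost import XGBClassifier  # imported here so the helpers above run without xgboost

    params = {**VANILLA_PARAMS, **overrides}
    if use_gpu:
        # XGBoost >= 2.0 uses device="cuda"; older releases used tree_method="gpu_hist".
        params.update(tree_method="hist", device="cuda")
    return XGBClassifier(**params)


scale = fit_min_max([10.0, 20.0, 30.0])
print(scale([10.0, 25.0]))  # [0.0, 0.75]

encoder = ReservedOrdinalEncoder().fit(["Apple", "Samsung", "Apple"])
print(encoder.transform(["Samsung", "NewPhoneBrand"]))  # [1, -1]
```

With xgboost installed, `make_classifier(max_depth=12).fit(X_train, y_train)` would train one configuration; `X_train` and `y_train` are placeholders for the prepared features and labels.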

If you want to activate GPU processing, the tree method has to be set to its GPU option. The parameters were searched over the ranges shown here. Why these parameters? I looked at how one of the winning competition submissions was tuned and took the parameters it used. XGBoost has many more parameters, but I tweaked the ones selected in this winning submission. On the right, you see the vanilla XGBoost parameters. For example, `max_depth` is the depth of each tree; vanilla XGBoost uses 6, and I tested 3, 6, 12, and 20. The learning rate is how fast your algorithm learns. With subsampling, as I was just saying, XGBoost can take the whole dataset or smaller chunks of it for training, which helps prevent overfitting. Column sampling means that some features can be dropped during training, so I tested what happens if you drop 40% or 60% of the columns. `n_estimators` is how many trees you build in your ensemble: 100 as in vanilla XGBoost, or 4,000 or 5,000. With this, we created a randomized-search-tuned XGBoost to compare against the vanilla one. From here, there are several observations.

On the x-axis, you see the percentage of positives: 50%, 45%, 25%, and 5%. On the y-axis, you see the F score, and the colors indicate the dataset size: 1,000, 10,000, and 100,000 samples. The picture on the left is for vanilla XGBoost and the one on the right for tuned XGBoost. What you see is that when the algorithm is given 50% positives, its performance is very good even with a small dataset. As you give it more data, the performance improves, as expected, and it more or less maintains its performance. Of course, it deteriorates at 25% positives, and the largest drop is at 5% positives. Another observation is that with the smallest datasets, tuning XGBoost did not consistently give better results; the vanilla model was working very well. But with the largest dataset, tuning XGBoost brings improvements. This is what we see in the next picture, which compares the vanilla and the random-search-tuned XGBoost on 100,000 samples. Again, you see the percentage of positive samples and the F score. On this large dataset, you see a small improvement from the randomly tuned XGBoost, most interestingly in the more critical range of 5% positives.

Now I think I'm almost at the end of the talk. If you scan this picture, you will have access to the white paper where all the experiments are described, and this is also my contact information. If you have any questions, I'll be very happy to answer them. Is there any question from the crowd? I don't know if I can.