Harnessing the Potential of Unstructured Data to Drive Enterprise Success by Dhivya Nagasubramanian

Dhivya Nagasubramanian

Lead AI Solutions Architect

Unlocking the Power of Unstructured Data: A Deep Dive into Audio Insights

In today's data-driven world, the terms "structured" and "unstructured data" are more relevant than ever. But what exactly do these terms mean, and how can businesses leverage unstructured data—particularly audio—to gain a competitive edge? In this article, we'll explore the nature of unstructured data, its significance, and practical applications, with a particular focus on audio data.

What is Unstructured Data?

Structured data refers to easily searchable information typically stored in relational databases, such as:

- Financial records

- Sales data

- Customer information

- Employee records

On the other hand, unstructured data lacks a predefined format and often contains valuable insights. According to the talk, approximately 75% of enterprise data is unstructured.

Examples of unstructured data include:

- Text documents (PDFs, scanned documents)

- Social media posts

- Audio and video files

- Emails and surveys

The Challenges of Unstructured Data

While unstructured data offers rich information, it also presents several challenges:

- Complexity: The lack of a common format makes analysis difficult.

- Volume: Dealing with vast amounts of data requires significant infrastructure.

- Risk of Missing Out (ROMO): Organizations that ignore unstructured data risk falling behind their competitors.

Real-World Applications of Audio Data

Now, let’s delve into some practical applications of unstructured audio data:

1. Identifying Customer Dissatisfaction

Listening to customer feedback through call centers can help isolate the root causes of dissatisfaction, enabling organizations to address issues promptly.

2. Enhancing Quality Assurance

For businesses in regulated industries, analyzing audio data for compliance can improve audit processes and help maintain high-quality standards.

3. Driving Operational Efficiency

Audio data can unveil opportunities for process improvements, leading to operational efficiency.

4. Tailoring Customer Interactions

By understanding customer needs and behaviors, businesses can personalize future interactions and recommend relevant products.

5. Targeted Marketing

Insights derived from audio data can inform marketing strategies, ensuring that messages reach the right audience through appropriate channels.

Leveraging Generative AI for Audio Insights

Organizations can harness generative AI to analyze audio data effectively. Here are a couple of use cases:

Use Case 1: Complaint Management

By implementing AI models, organizations can:

- Classify complaints from customer calls.

- Identify products involved in complaints.

- Utilize AI agents to resolve issues efficiently.
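
The complaint flow above can be sketched as a small pipeline: classify the call, identify the product, draft a response, and hold the draft until a human approves, edits, or discards it, as the talk describes. This is a minimal sketch under stated assumptions: `keyword_classifier` and `template_drafter` are hypothetical placeholders standing in for generative AI models, and the product list and status names are invented for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Tuple


@dataclass
class ComplaintCase:
    transcript: str
    is_complaint: bool = False
    product: Optional[str] = None
    draft_response: Optional[str] = None
    status: str = "new"  # new -> drafted -> approved / discarded, or closed


# Hypothetical product vocabulary; a real deployment would use its own catalog.
PRODUCTS = ("credit card", "mortgage", "checking account")


def keyword_classifier(transcript: str) -> Tuple[bool, Optional[str]]:
    """Placeholder for a generative AI classifier: plain keyword matching."""
    text = transcript.lower()
    is_complaint = any(w in text for w in ("complaint", "unhappy", "overcharged"))
    product = next((p for p in PRODUCTS if p in text), None)
    return is_complaint, product


def template_drafter(case: ComplaintCase) -> str:
    """Placeholder for a generative model that drafts a reply."""
    return f"We are sorry about the issue with your {case.product or 'account'}."


def triage(
    transcript: str,
    classify: Callable[[str], Tuple[bool, Optional[str]]] = keyword_classifier,
    draft: Callable[[ComplaintCase], str] = template_drafter,
) -> ComplaintCase:
    """Classify a call transcript and, for complaints, prepare a draft reply."""
    case = ComplaintCase(transcript)
    case.is_complaint, case.product = classify(transcript)
    if not case.is_complaint:
        case.status = "closed"
        return case
    case.draft_response = draft(case)
    case.status = "drafted"  # waits here for a human reviewer
    return case


def human_review(
    case: ComplaintCase, approve: bool, edited_text: Optional[str] = None
) -> ComplaintCase:
    """Record the human decision: approve (optionally edited) or discard."""
    if case.status != "drafted":
        raise ValueError("only drafted cases can be reviewed")
    if edited_text is not None:
        case.draft_response = edited_text
    case.status = "approved" if approve else "discarded"
    return case
```

Passing the classifier and drafter as callables keeps the human review step independent of whichever model is eventually plugged in.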

Use Case 2: Quality Auditing

For call quality assessments, generative AI can:

- Generate reports based on audio transcripts.

- Assess agent performance against organizational policies.
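
The talk names two inputs for this use case: the call transcript and the organization's policy documents. A rough sketch of how those might be combined into one prompt for an LLM follows. The function name, the prompt wording, and the `[P1]` policy IDs are illustrative assumptions, and the call to an actual model is deliberately left out.

```python
from typing import List


def build_audit_prompt(
    transcript: str, policy_excerpts: List[str], questions: List[str]
) -> str:
    """Assemble one prompt from the transcript, policies, and audit questions."""
    # Give each policy excerpt an ID so the answer can point back to it.
    policies = "\n".join(f"[P{i}] {p}" for i, p in enumerate(policy_excerpts, 1))
    numbered = "\n".join(f"{i}. {q}" for i, q in enumerate(questions, 1))
    return (
        "You are auditing a customer service call.\n"
        "Answer each question with Yes, No, or Not applicable, and cite the "
        "policy ID and the transcript line that support the answer.\n\n"
        f"POLICIES:\n{policies}\n\n"
        f"TRANSCRIPT:\n{transcript}\n\n"
        f"QUESTIONS:\n{numbered}\n"
    )
```

Asking for the policy ID and transcript line alongside each answer is one way to get the reasoning and provenance the talk mentions, so a human auditor can spot-check the result.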

Improving Operational Efficiency

Companies can significantly enhance operational efficiency—sometimes by over 70%—by integrating AI solutions for audio analysis. This approach allows businesses to:

- Assess more calls than human agents can handle.

- Minimize human error in data interpretation.

- Accelerate the time to resolution for customer issues.

The Technical Side of Audio Analysis

Cleaning and processing audio data involves several steps:

- Noise Reduction: Removing static and background noise.

- Speaker Diarization: Identifying who spoke when during conversations.

- Automatic Speech Recognition (ASR): Converting audio into text for easier analysis.
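
The talk also mentions normalizing the audio and detecting voice before diarization. The sketch below illustrates those two steps with peak normalization and a simple energy threshold, using only the standard library. It is not a noise-reduction algorithm and not the speaker's implementation; the 20 ms frame size and the 0.1 threshold are assumptions chosen for the toy example.

```python
import math
from typing import List, Tuple


def peak_normalize(samples: List[float], target_peak: float = 0.9) -> List[float]:
    """Scale the signal so its largest absolute sample equals target_peak."""
    peak = max((abs(s) for s in samples), default=0.0)
    if peak == 0.0:
        return list(samples)  # pure silence: nothing to scale
    scale = target_peak / peak
    return [s * scale for s in samples]


def frame_rms(samples: List[float], frame_len: int) -> List[float]:
    """Root-mean-square energy of each full, non-overlapping frame."""
    energies = []
    for start in range(0, len(samples) - frame_len + 1, frame_len):
        frame = samples[start:start + frame_len]
        energies.append(math.sqrt(sum(s * s for s in frame) / frame_len))
    return energies


def detect_voice(
    samples: List[float],
    sample_rate: int,
    frame_ms: int = 20,
    threshold: float = 0.1,
) -> List[Tuple[float, float]]:
    """Return (start_s, end_s) regions whose frame energy meets the threshold."""
    frame_len = max(1, sample_rate * frame_ms // 1000)
    energies = frame_rms(samples, frame_len)
    regions = []
    start = None
    for i, energy in enumerate(energies):
        t = i * frame_len / sample_rate
        if energy >= threshold and start is None:
            start = t  # a voiced region begins
        elif energy < threshold and start is not None:
            regions.append((start, t))  # the region ends at this frame
            start = None
    if start is not None:
        regions.append((start, len(energies) * frame_len / sample_rate))
    return regions
```

A fixed energy threshold cannot tell speech from loud background noise, which is one reason production systems tend to rely on trained voice activity detectors instead.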

Models like OpenAI Whisper transcribe speech to text, and the accuracy of that transcript determines how reliable the insights drawn from audio data are.
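
The talk describes Whisper as cutting the audio into thirty-second chunks, however long the recording is, before transcription. The sketch below illustrates only that windowing idea on a plain list of samples. It is not Whisper's own code; the function name and the zero-padding of the final chunk are assumptions made for the example.

```python
from typing import List

CHUNK_SECONDS = 30  # window length mentioned in the talk


def chunk_audio(
    samples: List[float], sample_rate: int, chunk_seconds: int = CHUNK_SECONDS
) -> List[List[float]]:
    """Split samples into fixed-length chunks, zero-padding the last one."""
    chunk_len = sample_rate * chunk_seconds
    chunks = []
    for start in range(0, len(samples), chunk_len):
        chunk = samples[start:start + chunk_len]
        # Pad the final, shorter chunk so every chunk has the same length.
        chunk = chunk + [0.0] * (chunk_len - len(chunk))
        chunks.append(chunk)
    return chunks
```

Fixed-length chunks mean the model always sees input of the same shape, whether the call lasts two minutes or twenty.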

Video Transcription

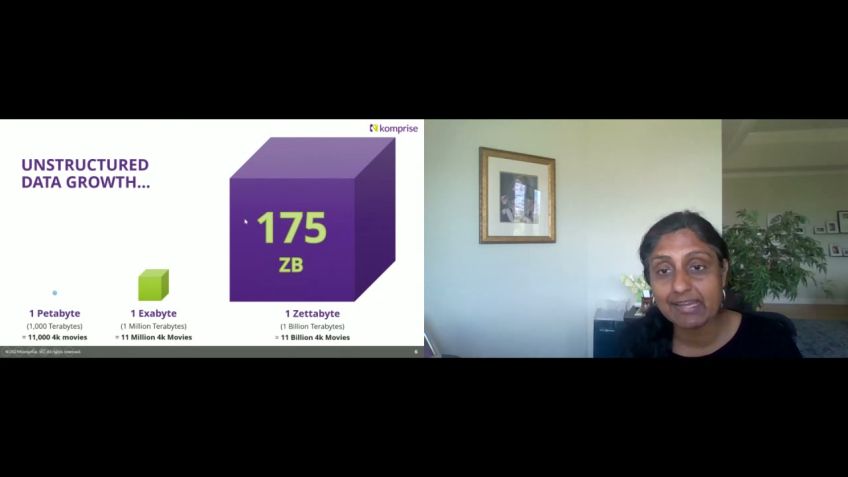

Before we jump into the topic of unstructured data, what do we really mean by unstructured data, and how is it different from structured data? Structured data, if you can imagine, is anything that's pretty straightforward to do a statistical analysis on, for example the numerical and categorical data that we store in a relational database management system. A few examples include financial data, sales figures, customer information, employee data, etcetera. The obvious challenge if we deal only with structured data is the rigidity and the limited scope it brings. On the other side, 75% of enterprise data is unstructured. So we have a wealth of information, but it's not tapped as completely as structured data is.

Unstructured data, by definition, means any data that lacks a predefined format, often carrying rich information with high real-world relevance. The most common example we all talk about is text data: scanned text documents, PDF documents. But there is more to unstructured data: audio, video, emails, surveys, social media posts. Wherever the customer touch points are, where it's free-form text, audio, or video, that is unstructured data as well. The challenge here is that 70% is great, and we have the latest technology right now, but it is still highly complex. There is no set format. And in order to model it, we need huge infrastructure backing. As I said, unstructured data is definitely underutilized, and we are just starting to explore that area for the best interest of the organization.

Just like we have the term FOMO, I invented this term called ROMO, which is risk of missing out. There are two choices as an enterprise. Either you join the bandwagon of applying the latest technology to tap into unstructured data, or you leave that behind and keep doing things the way you're doing them. What's the challenge, and what's the risk? The risk outweighs the challenges. The obvious challenges are the complexity, the variety, and the volume of unstructured data. But the risk of missing out is the missed insights and the inefficiency, and you will give ground to competitors who are well ahead of the curve in tapping into unstructured data for their enterprise benefit.

What are some of the real-world applications of unstructured data? This presentation will focus on audio as unstructured data, and we'll go through some use cases on how you can leverage audio data for enterprise success or to know your customer better. One real-world application is identifying the root cause of customer dissatisfaction right away. For example, customers leave their feedback and their thoughts on social media, in feedback forms and complaint forms, and in conversations with call center agents. Tapping into these resources helps identify the root cause of customer dissatisfaction and address it then and there. It also supports better quality assurance. Especially if you come from a highly regulated industry, you are required to maintain the highest quality, run quality assurance audits from time to time, and keep records of them.

So tapping into unstructured data helps with better quality assurance and also better customer service. It also uncovers process improvements, which could be an opportunity to improve operational efficiency. And not just that. When we tap into the unstructured data from these customer interactions, we better understand the customer's wants and needs. Then, during the next interaction, you can be well prepared on what product to recommend next. We can also tap into online behaviors that help determine the right marketing message and the right medium through which to market to your customers.

As I said, this presentation will focus on audio as unstructured data. Use case number one is just to give you an idea of how you can tap audio as unstructured data for your enterprise. You interact with your customers through call centers. Customers call in, or the agent calls the customer, and the conversation that happens holds a wealth of information. Let's say a customer calls in with a complaint. There could be a generative AI model that classifies whether the given call is a complaint or not, what product it is for, and what the intention is.

And is there any regulatory terminology being used by the customer or the agent in that call? That can then be passed on to a research framework. It could be a real back-office agent who works on those complaints, or, with today's latest developments, it could be an autonomous AI agent that looks into the complaint, understands it, pulls information from different databases, and comes up with a research finding.

Then the generative AI model could be used to generate a response for that complaint, which could be reviewed or edited by a real human agent who works in the back office. So this is a use case where a complaint comes through an audio call and is categorized, and the right product and the right team are identified. We can place an AI agent to look into the complaint and come up with a resolution, and a human agent validates it.

Then, again, an AI agent or a generative AI model generates the right response for the complaint, and a human agent approves, edits, updates, or discards the generated message. Another use case is agent scoring. You have quality audits. I'm sure all enterprises audit their calls, and the agent's behavior and the overall interaction on the call are assessed against a set of questions. Right now there are human testers who do it, but with the latest developments we can do the same using generative AI models. In this case, the input again is an audio recording. You do a certain level of cleanup on the audio, just like we would for a text document, and then you do the speaker separation on who said what and when.

Then we can have a generative model that looks up our policy and procedure documents in order to answer a list of quality audit questions based on the transcript. So the two inputs to the model would be the audio transcript and the policy documents of the organization. The LLM would then be able to answer those quality audit questions along with proper reasoning to show provenance. From both use cases we just discussed, one thing is very clear: we can improve operational efficiency and enhance coverage by using automation. When I say improve operational efficiency, what I mean is that right now there are challenges, especially for organizations that are big, and even medium-sized ones. You get millions of calls, or even, say, 100,000 calls.

It is humanly impossible for human agents to listen to all these calls and assess these questions, and the work is always prone to human error. So there are two challenges: limited coverage and restricted insight. On the other side, the benefit of implementing an AI solution is that you can assess more calls than a human agent technically could. That gives you more samples to understand what your customers' needs and wants are and what their complaints are about, and it also avoids those human errors. I don't mean to say that AI does not make errors. It does as well, but there are guardrails that you can put in place to mitigate that.

Overall, on average, by doing this the operational efficiency can improve by more than 70%. Also, if you think about the complaint use case, the time to resolution can be two to three times faster than if it went through the traditional manual route of somebody looking into those complaints and then trying to address them.

If you come from a regulated industry, it also helps in identifying regulatory breaches and staying ahead before things get out of hand. This is a technical slide on how the cleaning and speaker diarization discussed in use case two are done, where we try to understand who said what. There are many state-of-the-art open-source models available for us to use, built by OpenAI and NVIDIA, where audio is given as an input and the model transcribes that speech to text. Now you have a big block of text. But we still do not know who said what and when, and that's where speaker diarization comes into the picture. As you can see, it clearly separates out that from this timestamp to this timestamp it's speaker one, and from here to here it's speaker two.
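
To make the timestamp idea concrete, here is a minimal sketch that attaches a speaker label to each transcribed segment by picking the diarization turn it overlaps most. The tuple layouts and the function names are assumptions for illustration; real ASR and diarization tools each have their own output formats.

```python
from typing import List, Tuple

Turn = Tuple[float, float, str]     # (start_s, end_s, speaker) from diarization
Segment = Tuple[float, float, str]  # (start_s, end_s, text) from the ASR


def overlap(a_start: float, a_end: float, b_start: float, b_end: float) -> float:
    """Length in seconds of the intersection of two time intervals."""
    return max(0.0, min(a_end, b_end) - max(a_start, b_start))


def label_segments(segments: List[Segment], turns: List[Turn]) -> List[Tuple[str, str]]:
    """Pair each text segment with the speaker whose turn overlaps it most."""
    labeled = []
    for seg_start, seg_end, text in segments:
        best_speaker, best_overlap = "unknown", 0.0
        for turn_start, turn_end, speaker in turns:
            shared = overlap(seg_start, seg_end, turn_start, turn_end)
            if shared > best_overlap:
                best_speaker, best_overlap = speaker, shared
        labeled.append((best_speaker, text))
    return labeled
```

Segments that overlap no diarization turn are labeled "unknown" here rather than being guessed.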

So it clearly tells you that the agent said this and the customer said this, or whatever the use case might be: this person said this and that person said that. These are a few steps we can do. You have the audio. You clean up any background noise and normalize it, and then you detect voice. Sometimes it could be just white noise; just like text data, audio can also be noisy. Then you compute speaker embeddings and cluster them: this belongs to speaker one, this belongs to speaker two, based on, you know, the pitch and the tone.
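
The clustering step can be illustrated with a simple greedy scheme: each embedding joins the most similar existing cluster if the cosine similarity is high enough, and otherwise starts a new speaker. This is a toy sketch, not the method used in the talk. The 0.8 threshold is an assumption, and the embeddings would in practice come from a speaker-embedding model that is not shown here.

```python
import math
from typing import List


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity of two vectors (0.0 if either has zero length)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def cluster_embeddings(embeddings: List[List[float]], threshold: float = 0.8) -> List[int]:
    """Greedily assign each embedding to a speaker cluster; return the labels."""
    centroids: List[List[float]] = []
    counts: List[int] = []
    labels: List[int] = []
    for emb in embeddings:
        sims = [cosine(emb, c) for c in centroids]
        best = max(range(len(sims)), key=sims.__getitem__, default=None)
        if best is not None and sims[best] >= threshold:
            # Update the running mean of the matched cluster.
            n = counts[best]
            centroids[best] = [(c * n + e) / (n + 1) for c, e in zip(centroids[best], emb)]
            counts[best] += 1
            labels.append(best)
        else:
            # No cluster is similar enough: treat this as a new speaker.
            centroids.append(list(emb))
            counts.append(1)
            labels.append(len(centroids) - 1)
    return labels
```

The greedy approach depends on the order of the segments and on the threshold, so diarization toolkits typically use more robust clustering methods.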

Then it can be fed into an automatic speech recognition system, or you can pass the audio directly into an ASR, which will just give you a block of text without the speaker separation. One of the state-of-the-art models is OpenAI Whisper, which uses the underlying transformer architecture and has been trained on more than 600k hours of multilingual data. How it achieves state-of-the-art transcription is that, however long the audio is, it chunks the audio into thirty-second segments and then tries to find out which language is predominantly spoken. It comes up with a probability score, and based on that it selects the respective language, and then the audio passes through the encoder-decoder, that's the transformer, to transcribe or translate the audio into text. And again, like any data, be it structured or unstructured, text or audio, audio has issues as well. It's not going to be a free ride. We need to do some cleanup before we start using it. So what are a few things that we can bump into?

Messy data, meaning there could be static noise. Whenever we are on a call, we often have static noise in the background that makes it hard for us as humans to interpret what the person on the other side is saying. Imagine how it would be for a model to interpret audio with static noise. There are techniques to remove it from the audio, and that should be taken care of before we process the audio through an ASR. There could also be speaker overlap. When two people are talking, there's always a high chance that both talk at the same time, and that could mislead the ASR. There are ways to separate the speakers out even when there's overlap.

And code switching: people who are multilingual tend to use their preferred language and English together. Even current state-of-the-art models struggle with it, but this is an active area of research that research teams are working on.

And background noise: there could be many background noises in the audio that should be handled, and there are a few readily available packages that you can use to remove them. And last but not least, handling audio data is great, but it needs huge infrastructure. It costs approximately 63¢ per call on an NVIDIA DGX cluster, assuming each call is, on average, nine minutes. These are a few downstream business use cases of ASR. One is, of course, that you can better understand the sentiment of your customers by analyzing these audio calls. The second is that, as an organization, you can know where you stand on quality assurance and take corrective action, if required, much sooner than if you realized it late. It can also be used for targeted marketing.
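
The cost figure quoted above (about 63¢ per call at an average of nine minutes) can be turned into a rough budget estimate. The sketch below assumes cost scales linearly with the number of calls, which is a simplification not stated in the talk; the 100,000-call volume is the example volume mentioned earlier in the talk.

```python
COST_PER_CALL_CENTS = 63  # figure quoted in the talk
AVG_CALL_MINUTES = 9      # average call length assumed in the talk


def cost_per_minute_cents() -> float:
    """Implied processing cost per minute of audio, in cents."""
    return COST_PER_CALL_CENTS / AVG_CALL_MINUTES


def total_cost_dollars(num_calls: int) -> float:
    """Estimated total cost in dollars, assuming linear scaling per call."""
    return num_calls * COST_PER_CALL_CENTS / 100


print(cost_per_minute_cents())      # 7.0 cents per minute
print(total_cost_dollars(100_000))  # 63000.0 dollars for 100,000 calls
```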

You can know your customer better and use that for targeted marketing, trying to understand the next product the customer might be interested in. It also helps with call summarization. Call summaries are sometimes required to understand what the last interaction looked like, why the customer called, and how those complaints were addressed. Identifying the call intent and topic modeling will, of course, help show how frequently customers are calling and what they are calling about. Is there something we can do better to address their need without them having to call us, so that it leaves a better customer experience? And for any such project, not necessarily audio, with the unstructured data that we deal with, it's good to take an iterative approach to scale and succeed.

So one mantra I would leave behind is: have that bigger vision, and then start small. You start small and then scale, and you keep this iterative mindset for AI adoption in your business process: first identify the business process, identify where your data is going to come from and how much infrastructure you need, assess the risk, and then start building at a smaller scale.

If you can divide it into smaller segments, divide it, implement it in production, scale, and then repeat this process for the rest of the lines of business. And you measure and monitor, of course, from time to time, as these models can degrade over time as new patterns and new regulations evolve. As for future road maps and takeaways from this presentation: audio is one of the least talked about kinds of unstructured data, and there are many future directions for ASR, such as agent assistance with continuous feedback, voice biometrics, and voice-based AI assistants.

And the takeaway is that there are many business cases you can think about with the help of audio data, and there are many challenges, of course, to overcome. One strategy that I believe works is to have that bigger vision, start small, scale, and then repeat.
