Build Data Intense Applications using Google Cloud Platform

Pooja Kelgaonkar

Senior Data Architect

Building Data-Intensive Applications with Google Cloud Platform

Hello and welcome to our informational session discussing how to build data-intensive applications using Google Cloud Platform (GCP). Today, we'll be exploring the pillars of application design, the available GCP services, as well as several patterns that could be applied using GCP.

About the Speaker

I'm Pooja Kelgaonkar, a data professional with 16+ years of experience in the field. I currently work as a senior architect at Rackspace and was recently recognized as a Snowflake Data Superhero, one of 72 members worldwide.

Google Cloud: An Overview

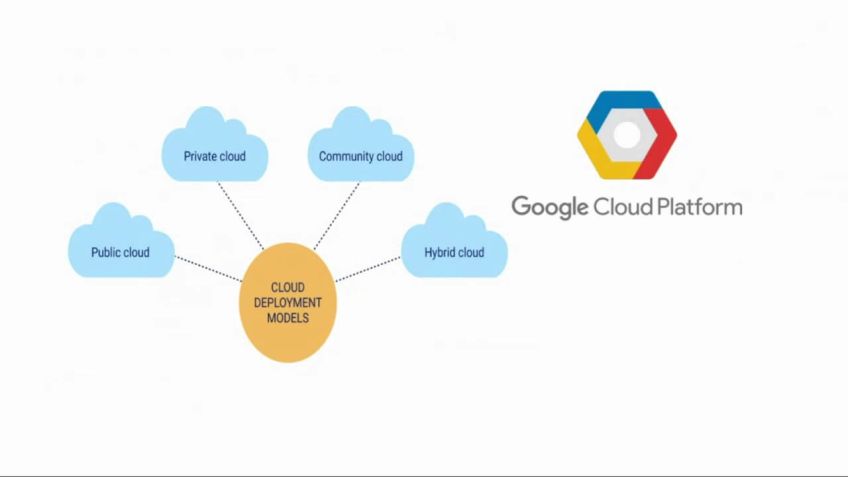

Google Cloud is Google's cloud offering. It opens up opportunities in advanced analytics and future development, and it encourages an innovation mindset. Cloud adoption also brings advantages such as reliability, scalability, and manageability.

Data-Intensive Application Pillars

The first pillar, the foundation of the data system, rests on three components:

- Reliable: How reliable your application is.

- Scalable: How scalable your application is.

- Maintainable: How costly the application is to maintain, whether low or high.

The second pillar is Data Models and Query Languages, which determines how data is stored, retrieved, and queried, for both transactional and analytical data. The third pillar, Distributed Data, covers data replication, partitioning, the type of transactions run on top of your data platform, and whether consistency is strong or eventual. Lastly, we have Derived Data, which covers how data is processed (batch or streaming) and what kind of analytics is built on it.
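To make the partitioning and replication ideas in the Distributed Data pillar concrete, here is a minimal sketch in Python. It is an illustration only and does not describe how any GCP service works internally; the function names `partition_for` and `replicas_for` and the "consecutive nodes" placement rule are assumptions made for this example.

```python
import hashlib
from typing import List


def partition_for(key: str, num_partitions: int) -> int:
    """Map a record key to a partition using a stable hash.

    A stable hash (unlike Python's built-in hash()) gives the same
    partition for the same key across processes and restarts.
    """
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_partitions


def replicas_for(partition: int, num_nodes: int, replication_factor: int) -> List[int]:
    """Place copies of a partition on consecutive nodes (hypothetical rule)."""
    if replication_factor > num_nodes:
        raise ValueError("replication_factor cannot exceed num_nodes")
    return [(partition + i) % num_nodes for i in range(replication_factor)]


if __name__ == "__main__":
    p = partition_for("customer-42", num_partitions=8)
    print("partition:", p)
    print("replica nodes:", replicas_for(p, num_nodes=8, replication_factor=3))
```

Spreading keys across partitions supports the "performance-efficient access" goal, while keeping more than one copy of each partition is what makes high availability and disaster recovery possible.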

How to Approach Application Design with GCP?

GCP supports different approaches to application design. These include Extract-Transform-Load (ETL), Extract-Load-Transform (ELT), and a hybrid approach that uses both. GCP is rich in data services that support relational databases, transactional operations, NoSQL, and analytical operations. GCP services can be categorized into compute offerings, storage offerings, big data, and machine learning/AutoML.
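The difference between ETL and ELT is mainly where the transformation runs. The sketch below illustrates this with Python's built-in `sqlite3` module standing in for the data platform; it is not GCP code, and the table names, sample rows, and the `clean` helper are all hypothetical.

```python
import sqlite3

# Hypothetical source records: amounts arrive as untidy strings.
RAW_ROWS = [("alice", " 10 "), ("bob", "25"), ("carol", "n/a")]


def clean(amount: str):
    """Return the amount as an int, or None if it is not a whole number."""
    text = amount.strip()
    return int(text) if text.isdigit() else None


def run_etl(conn: sqlite3.Connection) -> None:
    """ETL: transform in the pipeline, then load only clean rows."""
    conn.execute("CREATE TABLE orders_etl (customer TEXT, amount INTEGER)")
    transformed = [(customer, clean(amount)) for customer, amount in RAW_ROWS]
    transformed = [row for row in transformed if row[1] is not None]
    conn.executemany("INSERT INTO orders_etl VALUES (?, ?)", transformed)


def run_elt(conn: sqlite3.Connection) -> None:
    """ELT: load raw rows first, then transform with SQL inside the platform."""
    conn.execute("CREATE TABLE orders_raw (customer TEXT, amount TEXT)")
    conn.executemany("INSERT INTO orders_raw VALUES (?, ?)", RAW_ROWS)
    conn.execute(
        """
        CREATE TABLE orders_elt AS
        SELECT customer, CAST(TRIM(amount) AS INTEGER) AS amount
        FROM orders_raw
        WHERE TRIM(amount) <> '' AND TRIM(amount) NOT GLOB '*[^0-9]*'
        """
    )


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    run_etl(conn)
    run_elt(conn)
    print(conn.execute("SELECT * FROM orders_etl ORDER BY customer").fetchall())
    print(conn.execute("SELECT * FROM orders_elt ORDER BY customer").fetchall())
```

Both paths end with the same curated rows. The ELT path additionally keeps the untouched raw table in the platform, which is the raw or staging layer the talk refers to.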

Implementing Patterns with GCP

Using GCP, you can implement different patterns, such as the ETL pattern through Dataflow and Data Fusion, and the ELT pattern through BigQuery and Dataproc. You also have multiple options for analytical patterns, including predictive analytics with an automated model training cycle on Vertex AI, descriptive analytics with Looker, and AutoML services.

Designing a Data Platform with GCP

You can implement different platform designs using GCP services. These include:

- Data Warehouse: This involves designing a data warehouse using GCP's BigQuery, which can be integrated with machine learning services such as AutoML and Vertex AI.

- Data Lake: In contrast to a data warehouse, a data lake involves storing and maintaining your data at the cloud storage level. Cloud Storage forms the core of the data lake in this design.

- Data Mesh: This involves decentralizing data at a domain level. Each domain would have its own processing and transformation logic.
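For the data warehouse design, the talk also mentions BigQuery ML, where models are created with CREATE MODEL statements and invoked with SELECT statements. The sketch below only builds such statements as strings in Python; nothing is sent to BigQuery. The dataset, table, model, and label names are hypothetical, and `logistic_reg` is just one of the model types BigQuery ML supports.

```python
def create_model_sql(model: str, source_table: str, label: str,
                     model_type: str = "logistic_reg") -> str:
    """Build a BigQuery ML CREATE MODEL statement.

    Names are interpolated directly, so they must be trusted identifiers,
    never raw user input.
    """
    return (
        f"CREATE OR REPLACE MODEL `{model}`\n"
        f"OPTIONS (model_type = '{model_type}', input_label_cols = ['{label}']) AS\n"
        f"SELECT * FROM `{source_table}`"
    )


def predict_sql(model: str, input_table: str) -> str:
    """Build a SELECT statement that runs predictions with ML.PREDICT."""
    return (
        f"SELECT * FROM ML.PREDICT(MODEL `{model}`, "
        f"(SELECT * FROM `{input_table}`))"
    )


if __name__ == "__main__":
    # Hypothetical names, for illustration only.
    print(create_model_sql("analytics.churn_model", "analytics.customers", "churned"))
    print(predict_sql("analytics.churn_model", "analytics.new_customers"))
```

In practice these statements would be run in the BigQuery console or through a client library, and the training query would normally select specific feature columns rather than `SELECT *`.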

Data Governance with GCP

Data governance is crucial to any application design. GCP offers Dataplex, a service for unified data management and governance controls. Policies defined in Dataplex can be applied to warehouses, data lakes, and data marts, and a data catalog can be implemented at both the technology and business levels.

Conclusion

Building data-intensive applications using Google Cloud Platform involves understanding and applying application design principles and using the breadth of services available on GCP. Whether you're extracting and transforming data, performing predictive analytics or designing a data platform, you'll find that GCP offers reliable, scalable, and efficient services to meet your needs.

Video Transcription

Hello everyone, good morning, and welcome to the session. Thanks for joining. In this session we are going to talk about building data-intensive applications with Google Cloud Platform. Before we start, quickly about myself: I'm Pooja Kelgaonkar.

I have 16-plus years of experience in the data domain. I'm currently working as a senior architect with Rackspace, and I've recently been recognized as a Snowflake Data Superhero, one of the 72 members across the globe. This is the agenda for today's session. We are going to talk about the pillars of data-intensive applications: what the pillars are that contribute to application design and how we can use them to design an application. What GCP services are available? What data application and analytics patterns can we design with Google Cloud Platform? What are the various data platform designs, like data warehouse, data lake, and data mesh, and how can we build them using GCP services? And what data governance design model can we implement, and which GCP services can be leveraged to build it? So let's get started with the application pillars. When we talk about Google Cloud, we know it's a cloud offering by Google. When we talk about cloud adoption, these are some of the key reasons, or opportunities, because of which we move to the cloud. Cloud offers a lot of opportunities in terms of advanced analytics, future development, and the change in perception from how to build something to what should be built: an innovation mindset.

Most importantly, let's talk about the pillars of application design. The first pillar is the foundation of the data system. Three components contribute to that foundation: reliable, scalable, and maintainable applications. So how reliable is your application, how scalable is it, and what about maintenance: is it low-cost or high-cost to maintain?

The second thing is data models and query languages. What kind of databases are they, relational or non-relational? How are they queried? Do they support SQL or NoSQL models? Then storage and retrieval: what kind of data is being stored and retrieved? Is it retrieved by consumer integration applications or by queries? And what kind of data is it, transactional or analytical? The second important pillar is distributed data.

What kind of data distribution is there? How is data being replicated? Is it replicated and designed in such a way that it is always highly available? That covers disaster recovery and disaster recovery planning. The next one is partitioning: how data is stored and partitioned across the data platform so that you get performance-efficient access to the data systems. Next is transactions: what kind of operations are being run on top of your data platform? Are they analytical or transactional, are low-level operations being done, and how is the data being scanned? And the last and most important thing is consistency. How consistent is your data: is strong consistency implemented, or do you have eventual consistency? Depending on the type of application you have, the type of consumers you have, and the agreement between your application and your consumers, you define the consistency policies.

The last one is derived data: how your data is derived, stored, and processed in your platform. Once you bring in data, what is the nature of that data? Is it treated as batch or as streaming, and is it an event stream or a database stream? And what is the future of your data, that is, what kind of analytics is being done: descriptive, prescriptive, or predictive? Given the era of generative AI, what kind of AI models can you build, how has your data been categorized for AI/ML integrations, and what kind of predictive analytics can you build? So these are the three important pillars of data-intensive application design. The first one is the foundation of the data system, the second one is distributed data, and the third one is derived data. These are the three pillars we will use to talk about the various patterns and how to design applications. Let's talk about the approach. What kind of approach can you implement when we talk about application design?

We know the ETL, ELT, and hybrid approaches. These are the three widely used patterns when we design any data application. In the first, ETL, you extract the data, transform it on the fly, and load it, so it is transformed before it reaches the data platform. The second one is extract, load, and transform (ELT), where you load your data to the raw layer or staging layer on the data platform first, and then run a set of transformation jobs to transform your data and have it ready for analytics.

And the third one is the hybrid, where you end up using a mix of both ETL and ELT, depending on the heterogeneous data sources you have, the integrations available with those sources, and the kind of data you want to process. The next topic is the data services. I'm sure most of you are aware of GCP and its data services, so I won't spend much time explaining each of them. But as you know, GCP is rich in data services, and there are various services available that support relational databases, transactional operations, NoSQL, and analytical operations as well. The services can also be categorized by the type of storage available, the type of analytics being run, the type of AI/ML being run, and the type of serving model, that is, which services can be leveraged to integrate with consumer applications.

Be it mobile applications, web-based applications, or any typical set of BI analytical applications where consumers fetch data from your platform as reports and dashboards. The next one is another level of categorization, where we categorize the GCP services into compute offerings, storage offerings, big data, and, most importantly, machine learning or AutoML. GCP also offers lots of AutoML services that are a kind of plug and play: you may not need to develop ML models yourself to use them. You just plug them in depending on your application requirements. Now, let's see how these patterns can be implemented using GCP. This is an ETL pattern. If you observe here, Dataflow is the service being used as the ETL tool, and the various sources here are integrated as part of the ETL integration. Then there is a set of services that can be used to store and analyze the data.

Similar to Dataflow, Data Fusion can also be used as the ETL service. You can integrate it with heterogeneous sources, bring in the data directly from the source or have it landed in the storage layer and read it from there, and push it to the data lake, a data warehouse, or the storage layer, depending on your application design and requirements.

These are the two ETL designs that you can implement using Dataflow or Data Fusion on GCP. The second one is the ELT pattern. So how can you design ELT? As the name itself says, we extract and load the data first and then transform it, with the transformation leveraging the platform's services. Here is one of the most commonly and widely used architectures, which uses BigQuery for the ELT implementation. You can do the same using Dataproc. This is the reference architecture for the Hadoop migration use case, where you design the ELT pattern using Dataproc: all your Hadoop and Spark jobs are converted to run on ephemeral clusters using Cloud Dataproc. This is again an ELT pattern, where you extract data from the sources, land it on the storage layer, and then read the data from storage and process it using Spark jobs. Once your data has been processed, you store it on the storage layer or push it to the warehouse or Bigtable, depending on the type of consumers you have and the accessibility required. So these are the two patterns and the designs that we have seen: ETL and ELT. Now let's see what analytical patterns we can implement. When I talk about data analytics, there are two types of analytics, right?

The predictive ones, and the descriptive ones where all the dashboards and BI tools fall. This is an example of predictive analytics, where you implement an automated model training cycle using Vertex AI and the model is trained on the new data coming in. As and when new data comes to the table, the model gets automatically trained and deployed, so that you have a continuous training and deployment cycle available. The next one is Looker. Looker is a GCP service that can be used to implement descriptive analytics. You can have dashboards and various reports designed and made available to the consumers. The next is AutoML. AutoML services are widely used: if you don't want to build your own customized models using Vertex AI, you can very well use these models. Some of the widely used models are NLP (natural language processing) models, translation models, vision models, and text-to-speech. Depending on the business requirements, you can use these ML services. Now let's talk about the most important part of the session: the platform designs. What are the different platform designs that we can implement using GCP services? The first one is the warehouse platform.

I believe you are very well aware of the data lake, data warehouse, data mesh, and lakehouse patterns. This particular pattern is the data warehouse, where you design and build a warehouse using GCP BigQuery. There are various integrations available. If you see here, these are the various sources, and they can be integrated with BigQuery. The middle layer is the data processing layer, where data can be processed in BigQuery, leveraging BigQuery's native power, or by leveraging the Dataflow, Data Fusion, or Dataproc services. So it covers both patterns, ELT and ETL: depending on the requirements, you can design the warehouse to follow either of them or both, depending on the type of source you have and the ingestion and integration requirements. That is followed by the machine learning layer.

As you all know, BigQuery is one of the flagship services of GCP, and it can be integrated with any other service on Google Cloud Platform. In the same way, it can be very well integrated with AutoML or Vertex AI. But along with these two machine learning offerings, BigQuery also has built-in native support in the form of BigQuery ML. It's similar to DML statements: there are specific algorithms supported as part of BigQuery ML, and you can create models using CREATE MODEL statements and have them trained and deployed on BigQuery. Then you can use simple SELECT statements to invoke those models and run predictions. So these are the three ways you can implement machine learning in a warehouse pattern using GCP BigQuery. And as with any other consumer integration, you can have various consumer integrations available, either through Looker or through any of the third-party platforms.

You can even integrate it with Tableau, Power BI, or any of the open source tools available. BigQuery has connectors available, and you can very well connect them with BigQuery for a consumer application design. The next pattern is the data lake pattern. A data lake is different from a data warehouse: a data lake is about storing and maintaining your data at the storage level. So even though you have various integrations for batch and streaming and you have intensive data processing, you still have a storage layer where you store data for future reference and maintain the historical data. You can do the same in the warehouse as well. But in the lake design, if you see it here, Cloud Storage is used as the core of the data lake, and the data lake is implemented using Cloud Storage. The storage is at the center, and these are the various source-level integrations. There are two types of processing available. Stream processing can be implemented using Pub/Sub and Dataflow, and batch processing can be implemented using various loading jobs. All the data can be pulled directly into the source buckets on Cloud Storage.

Then there is machine learning available using Vertex AI, or Dataprep and AutoML. The same goes for the consumer layers. So this is how you can build the lake design. And when we talk about a data lake, even though your data is stored at the storage level, you can have various data marts defined in either BigQuery or Bigtable, so that your data is served to the consumer integrations via BigQuery or Bigtable. The next one is the data mesh design. Data mesh, as you all know, is about decentralizing the data at a domain level. This is in contrast to the warehouse design, where the data was centralized at the center and the consumers revolved around it. Unlike that, data mesh decentralizes the data at a domain level. If you see this design, there are various domains defined here, and these are the producer and consumer layers. You can have the data segregated at the domain level. Each domain has its own integrations from source to consumers and its own processing and transformation logic, and your consumers access the producer's data at a domain level.

There are two ways you can have the consumers integrated. One is through authorized access and authorized views. Here, if you see, BigQuery is being used to store all the curated data, and BigQuery has the authorized views feature. Access can be defined as authorized views, authorized functions, or authorized datasets. If you give access at the authorized dataset level, your consumer will be able to access the various objects present within the dataset. Or you can do it at the view level, where you define the target-layer views and give access to them as authorized views.

And if you see it here, the consumers are nothing but the various kinds of consumer applications through which this data can be accessed. For example, reporting is one type of consumer, where data is accessed through BI tools. As we know, any BI tool (here we are talking about Looker) internally runs a set of queries to fetch the data from BigQuery. These authorized views can be used to build the dashboards, and data is fetched through the authorized views, so the underlying data won't be accessible to the consumer layer. The same goes for any downstream analytical application: any consumer application running on, let's say, Dataproc or Dataflow, or any other business application that treats this as source data, can access it through the authorized views or datasets.

The next one is through the read API. This may not be the most recommended way to do it, but it is also one of the ways: you define the read APIs and give users access based on role-based policies. Access to the APIs is given to specific users so that they can hit your underlying BigQuery data and process it. This can again be used for machine learning applications or analytical-layer applications. The next design pattern is governance. This is the most important part of the application design, where these five pillars of governance can be implemented. GCP offers a service, Dataplex, which helps define unified data management and governance controls. You can define policies at the Dataplex level that can be applied to warehouses, data lakes, or data marts. And this is a sample use case for implementing a data catalog. A data catalog can be implemented at a technology level and a business level.

So this is how you can implement a data catalog and data governance using Dataplex. This is all I had for today's session, so I'll pause here. There is still one more minute remaining, so I'll open up for any questions. If you have any, please feel free to drop them in the chat or the Q&A box here, and I'll be happy to answer them. Any questions in the last few seconds? And thank you so much for joining the session. I can see one question. As I said, GCP is rich in data services and solutions. So when I talk about implementing any data application, GCP is one of the hyperscalers that I would definitely prefer to use, given its support for the various types of data and platform integrations, like lake, warehouse, or data mesh.
