Dialog Summarization: Business Cases and Features by Olga Kanishcheva


Unraveling the Art of Dialogue Summarization: A Deep Dive into NLP Techniques

With new technology producing an overwhelming volume of information, efficient text summarization has become a much-needed task. Natural Language Processing (NLP) plays a vital role in condensing voluminous texts into concise summaries; this session focuses on dialogue summarization.

About the Expert

A dedicated NLP Engineer with over seven years of experience and a PhD in Computer Science, I have participated in numerous international projects and authored many scientific publications. I currently work at a software company, where I devote my time to solving complex NLP tasks.

In addition to these accomplishments, I also serve as a member of the Special Interest Group on Slavic Natural Language Processing and am an organizer of the International Computational Linguistics and Intelligent Systems conference in Ukraine.

Understanding Text Summarization

The primary aim of text summarization is to identify the pivotal points of information, extract them, and present the most relevant content to the user in the desired format. Summarization methods can be broadly classified into two groups: extractive and abstractive.

Extractive Methods

These methods endeavor to identify and extract the most significant sentences from the input text. These sentences remain unaltered and are combined to form a summary.
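
As a rough illustration of the extractive idea, the sketch below scores each sentence by the average document-wide frequency of its words and keeps the top-scoring sentences unchanged, in their original order. The function names, the naive sentence splitter, and the frequency-based scoring are assumptions made for this example; they stand in for whichever statistical approach a real system would use.

```python
import re
from collections import Counter


def split_sentences(text):
    # Naive splitter (an assumption): break after ., ! or ? followed by whitespace.
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def extractive_summary(text, num_sentences=2):
    sentences = split_sentences(text)
    # Word frequencies over the whole document.
    freq = Counter(re.findall(r"[a-z']+", text.lower()))

    def score(sentence):
        tokens = re.findall(r"[a-z']+", sentence.lower())
        # Average frequency, so long sentences are not favored automatically.
        return sum(freq[t] for t in tokens) / max(len(tokens), 1)

    ranked = sorted(range(len(sentences)),
                    key=lambda i: score(sentences[i]), reverse=True)
    # Keep the selected sentences unaltered and in document order.
    chosen = sorted(ranked[:num_sentences])
    return [sentences[i] for i in chosen]


print(extractive_summary("Cats sleep a lot. Cats eat fish. Dogs bark."))
```

The output consists only of sentences copied from the input, which is the defining property of an extractive summary.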

Abstractive Methods

Abstractive methods, on the other hand, produce new text based on the given input text. They generate novel sentences while preserving the overall meaning of the original input. Popular models in this category include GPT, T5, BART, and Pegasus.

Introduction to Key Techniques

Let's take a brief look at the key abstractive methods frequently employed in dialogue summarization:

GPT

GPT (Generative Pre-trained Transformer) is a family of models that includes GPT, GPT-2, and GPT-3. These models are pre-trained as a transformer decoder on the task of language modeling. The core idea is to predict the next token from the preceding context, reading left to right, which lets the models be applied across various NLP tasks.
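
To make the next-token idea concrete, here is a deliberately tiny sketch. It is not GPT: it replaces the transformer with simple bigram counts, so it conditions on only one previous token instead of the full left context. All names are hypothetical; what it shares with GPT is the left-to-right loop of predicting the next token and appending it.

```python
from collections import Counter, defaultdict


def train_bigram(tokens):
    # Count how often each token follows each previous token.
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts


def predict_next(counts, prev):
    # Most frequent continuation, or None if prev was never followed by anything.
    if prev not in counts:
        return None
    return counts[prev].most_common(1)[0][0]


def generate(counts, start, max_new_tokens=5):
    # Autoregressive loop: each prediction becomes context for the next one.
    out = [start]
    for _ in range(max_new_tokens):
        nxt = predict_next(counts, out[-1])
        if nxt is None:
            break
        out.append(nxt)
    return out


counts = train_bigram("a b a b a c".split())
print(generate(counts, "a", max_new_tokens=3))
```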

T5

T5 (Text-to-Text Transfer Transformer) is another sequence-to-sequence transformer, developed by Google Research. It is pre-trained on the problem of restoring gaps, that is, spans of consecutive tokens hidden in the source text, and it only has to generate the hidden spans themselves. It is a multi-task model that can be used for text summarization, translation, question answering, and more.
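
The gap-restoration objective can be sketched as a function that turns a token list into an (input, target) training pair. This is a minimal sketch under assumptions: the spans to hide are passed in explicitly so the result is deterministic, whereas in actual pre-training they are sampled at random, and the helper name `span_corrupt` is made up for illustration. The `<extra_id_N>` sentinel naming follows the convention of the Hugging Face T5 tokenizer.

```python
def span_corrupt(tokens, spans):
    """Build a T5-style (input, target) pair.

    spans: sorted, non-overlapping (start, end) index pairs, end exclusive.
    """
    inputs, targets = [], []
    pos = 0
    for i, (start, end) in enumerate(spans):
        sentinel = f"<extra_id_{i}>"
        inputs.extend(tokens[pos:start])   # visible text before the gap
        inputs.append(sentinel)            # the gap is replaced by one sentinel
        targets.append(sentinel)           # the target names the gap...
        targets.extend(tokens[start:end])  # ...and spells out its hidden tokens
        pos = end
    inputs.extend(tokens[pos:])
    targets.append(f"<extra_id_{len(spans)}>")  # closing sentinel
    return inputs, targets


tokens = "Thank you for inviting me to your party last week .".split()
source, target = span_corrupt(tokens, [(2, 4), (8, 9)])
print(" ".join(source))
print(" ".join(target))
```

Note that the target contains only the hidden spans, not the whole sentence, which is the difference from BART-style reconstruction.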

BART

BART (Bidirectional and Auto-Regressive Transformers) is one of the most popular models for text summarization. Developed by Facebook, it is a sequence-to-sequence transformer with both encoder and decoder parts, pre-trained to restore corrupted, noisy text.
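
The kinds of text corruption listed in the talk (token masking, token deletion, text infilling, sentence permutation, and document rotation) can be sketched as small functions. This is an illustrative simplification: positions and spans are passed in explicitly here, whereas real pre-training samples them at random, and the function names and the `<mask>` string are assumptions for the example.

```python
import random

MASK = "<mask>"


def token_masking(tokens, positions):
    # Replace the chosen tokens with a mask, one mask per token.
    return [MASK if i in positions else t for i, t in enumerate(tokens)]


def token_deletion(tokens, positions):
    # Drop the chosen tokens; the model must work out where text is missing.
    return [t for i, t in enumerate(tokens) if i not in positions]


def text_infilling(tokens, start, end):
    # Replace a whole span with a single mask, hiding the span length too.
    return tokens[:start] + [MASK] + tokens[end:]


def sentence_permutation(sentences, rng):
    # Shuffle the sentence order without changing the sentences.
    shuffled = list(sentences)
    rng.shuffle(shuffled)
    return shuffled


def document_rotation(tokens, pivot):
    # Start the document at the pivot token and wrap around.
    return tokens[pivot:] + tokens[:pivot]


tokens = list("ABCDE")
print(token_masking(tokens, {1, 3}))
print(token_deletion(tokens, {1, 3}))
print(text_infilling(tokens, 1, 4))
print(document_rotation(tokens, 2))
print(sentence_permutation(["S1.", "S2.", "S3."], random.Random(0)))
```

In every case the training target is the original, uncorrupted text, so the model learns to reconstruct the full input.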

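Pegasus

Pegasus (Pre-training with Extracted Gap-sentences for Abstractive Summarization) is a sequence-to-sequence transformer whose pre-training task, generating whole sentences that were removed from the document, was selected specifically for automatic summarization. In the talk it is described as the best model for text summarization, outperforming BART and T5 on all measured datasets.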
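
A rough sketch of how a gap-sentence training pair could be built: pick the sentence that overlaps most with the rest of the document, replace it with a mask in the source, and use it as the target. The importance score below is a simplified set-based unigram overlap F1, chosen for brevity and not the exact selection procedure of the model, and the names `importance`, `gap_sentence_example`, and the `<mask_1>` placeholder are assumptions for this example.

```python
import re

MASK_SENT = "<mask_1>"  # placeholder for a removed sentence


def tokenize(sentence):
    return re.findall(r"[a-z0-9']+", sentence.lower())


def importance(i, sentences):
    # Unigram overlap F1 between sentence i and the rest of the document.
    sent = set(tokenize(sentences[i]))
    rest = set(t for j, s in enumerate(sentences) if j != i for t in tokenize(s))
    overlap = len(sent & rest)
    if overlap == 0:
        return 0.0
    precision = overlap / len(sent)
    recall = overlap / len(rest)
    return 2 * precision * recall / (precision + recall)


def gap_sentence_example(sentences, num_gaps=1):
    ranked = sorted(range(len(sentences)),
                    key=lambda i: importance(i, sentences), reverse=True)
    gaps = set(ranked[:num_gaps])
    # Source: the document with the selected sentences masked out.
    source = " ".join(MASK_SENT if i in gaps else s
                      for i, s in enumerate(sentences))
    # Target: the removed sentences, which act as a pseudo-summary.
    target = " ".join(sentences[i] for i in sorted(gaps))
    return source, target


doc = ["The team approved the budget.",
       "The team ate lunch.",
       "Budget talks resume Monday."]
source, target = gap_sentence_example(doc)
print(source)
print(target)
```

Because the target is a whole sentence that resembles a summary of the remaining text, this pre-training task is closer to summarization than restoring random tokens.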

Challenges in Dialogue Summarization

Although text summarization methods continue to advance, dialogue summarization poses its own set of challenges. For instance, in a multi-participant dialogue, key information is usually scattered across messages from different participants, which leads to low information density.

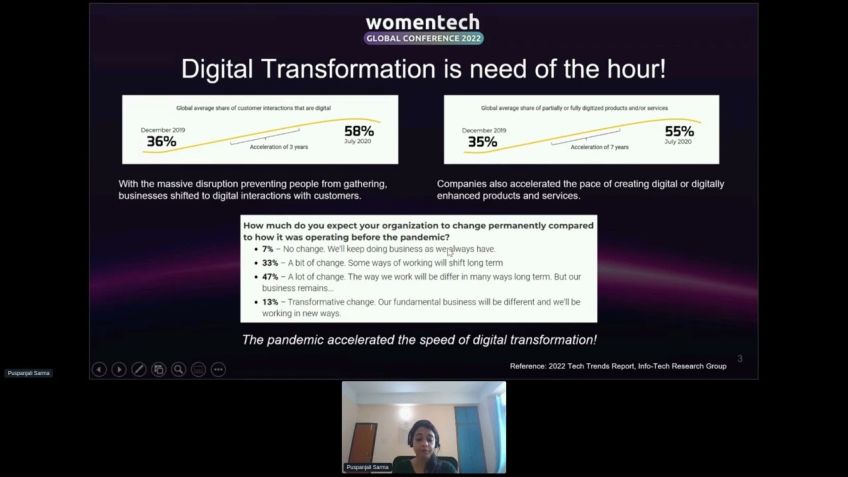

Increasing Demand for Meeting Summarizations

Zoom, Google Meet, and similar platforms have seen a surge in use over the recent past due to the pandemic. Dialogue summarization tools come in especially handy in such settings, helping to extract key points and summaries from meetings and discussions.

Data Sets for Text Summarization

The success of any data science or NLP project relies heavily on the availability of relevant, accurate datasets for training. For dialogue and meeting summarization, some popular datasets include the ICSI Meeting Corpus (from the International Computer Science Institute in Berkeley), the AMI Meeting Corpus, SAMSum, MediaSum, and DialogSum.

A General Approach to Meeting Summarization

Because transformer-based abstractive models cannot handle long input text, a general approach to meeting summarization first filters the input down to its most important sentences and then passes the filtered text to an abstractive model, which generates the summary.
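
The two-stage approach can be sketched as follows. This is a minimal sketch under assumptions: the filter is a simple word-frequency scorer with a token budget, and `abstractive_fn` is a hypothetical stand-in for whatever abstractive model is plugged in at the second stage (for example BART or Pegasus). Neither function is taken from a specific library.

```python
import re
from collections import Counter


def filter_utterances(utterances, max_tokens):
    """Stage 1: keep the highest-scoring utterances that fit the token budget."""
    tokenized = [re.findall(r"[a-z0-9']+", u.lower()) for u in utterances]
    freq = Counter(t for toks in tokenized for t in toks)
    scores = [sum(freq[t] for t in toks) / max(len(toks), 1)
              for toks in tokenized]
    ranked = sorted(range(len(utterances)),
                    key=lambda i: scores[i], reverse=True)
    kept, used = [], 0
    for i in ranked:
        if used + len(tokenized[i]) <= max_tokens:
            kept.append(i)
            used += len(tokenized[i])
    # Restore the original order so the dialogue still reads chronologically.
    return [utterances[i] for i in sorted(kept)]


def summarize_meeting(utterances, abstractive_fn, max_tokens=512):
    """Stage 2: pass the filtered text to an abstractive model."""
    filtered = filter_utterances(utterances, max_tokens)
    return abstractive_fn(" ".join(filtered))


meeting = ["Anna: the release is blocked by the login bug.",
           "Ben: ok.",
           "Anna: Ben will fix the login bug by Friday."]
# A trivial placeholder instead of a real model, just to show the data flow.
print(summarize_meeting(meeting, abstractive_fn=lambda text: text.upper(),
                        max_tokens=9))
```

Keeping the second stage as a plain callable means the filter and the generator can be swapped independently, which matches the point that different models can be combined.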

In summary, dialogue summarization is an exciting area of technology that offers vast potential. Whether you are new to the field and are looking for reliable models or datasets or an experienced professional looking to delve deeper, the resources mentioned above offer a great starting point.

I hope the insights shared in this session have added value and sparked your curiosity to explore the field of dialogue summarization further.

Disclaimer: The success and efficacy of the methods and techniques shared in this article may vary based on the application and usage parameters.

Video Transcription

Hello. I'm very glad to be here. Thank you very much to the very cool team and the organizers of this conference, and thank you to the girls who helped me with some questions before the conference, when I had some problems. So, some words about me. I'm an NLP engineer at a software company. I like different NLP tasks and I love the NLP area. I have a PhD degree in computer science and I am the author of many scientific publications; you can find some of them on the internet or in scientific databases. I have practical experience in real projects for more than seven years in NLP, and I'm a participant in many international projects, usually European projects. Some additional information about me: I'm a member of the Special Interest Group on Slavic Natural Language Processing, and I'm an organizer of the International Computational Linguistics and Intelligent Systems conference here in Ukraine. Now I think we can start with the main topic of this presentation: dialogue summarization, the summarization methods for this task, some problems, issues, et cetera. Today I want to discuss the summarization task for dialogues.

The main idea of text summarization is to take an information source, extract the main content from it, and present the most important content to the user in a condensed form and in a manner sensitive to the user's or application's needs. All summarization methods can be divided into two groups: extractive and abstractive.

Extractive methods try to extract the most important sentences from the input text. These sentences are not changed and are combined into the summary. Usually these are statistical approaches. Abstractive methods produce new text, a summary, based on the input text. It consists of other sentences but preserves the main meaning of the input text. For dialogue summarization we're interested in the abstractive methods.

The most popular abstractive methods now are GPT, BART, T5, Pegasus, et cetera. You can see some of them on the slide, and now some words about these methods. First is GPT. GPT is a family of models, GPT, GPT-2, and GPT-3, which are based on pre-training a transformer decoder for the task of language modeling. This task is to predict the next token from the previous ones, that is, to predict the text from left to right. If you don't know what the transformer architecture is, I recommend reading scientific papers or some blogs on the internet or on Medium to understand it, because I think the transformer is now central to natural language processing. Models based on transformers are applied to different NLP tasks now, and researchers and software developers get very good results when they use the transformer architecture for their tasks.

The next is T5. T5 is another sequence-to-sequence transformer, broadly similar to BART, developed by Google Research. T5 is pre-trained on the problem of restoring gaps.

A gap is a set of consecutive tokens. During training, gaps in the source text are hidden, and the task of the model is to generate, that is, to predict them. Unlike BART, where the entire text is generated, T5 only needs to generate the hidden spans themselves. T5 models can be used not only for text summarization but also for other tasks. It's a multi-task model: for example, for translation, for summarization, and for question answering, if I remember correctly. The next is BART. BART is one of the most popular models for text summarization. It's a sequence-to-sequence transformer which is pre-trained on the restoration of corrupted, noisy text, and it was developed by Facebook. On this slide you can see some types of text corruption: token masking, token deletion, sentence permutation, document rotation, and text infilling. BERT uses only an encoder, GPT uses only a decoder, and BART has both the encoder and the decoder parts; you can see this on the slide also. I think it's one of the most popular.

And the best model for summarization is Pegasus. Pegasus is a model with a pre-training task specially selected for automatic summarization, so this model works especially well on the text summarization task.

From an architectural point of view, this is an ordinary sequence-to-sequence transformer that also has encoder and decoder parts. But instead of restoring random pieces of text, it is proposed to use the task of generating missing sentences. So you need to predict the missing sentences, whole sentences.

It consists in selecting the most important sentences from the document, replacing them with a mask token, forming a quasi-abstract from them, and trying to generate this quasi-abstract. This problem can be solved simultaneously, in tandem with masked language modeling.

Pegasus outperforms BART and T5 on all measured datasets, so it's a very good model for text summarization. OK, let's go to dialogue summarization. Notwithstanding the success of single-document summarization, these methods cannot be easily transferred to multi-participant dialogue summarization. So we could not use, for example, text summarization methods designed for one document for meeting summarization and get good results. I think it's impossible. What is the problem? Firstly, the key information of one dialogue is often split between several messages from different participants, which leads to low information density. Secondly, the dialogue contains multiple participants, sometimes topic drifts, frequent coreferences, domain terminology, et cetera. All these characteristics influence this task, and dialogue summarization is a very difficult task.

On the slide you can now see the number of dialogue summarization papers published over the past five years for each domain. You can see on this diagram that meeting summarization is the most popular, because it's the most difficult task. But of course we have different types of dialogues: chats, medical dialogues, customer service, emails, et cetera. The demand for meeting summarization has especially increased during the coronavirus pandemic, because many people started to use meeting software such as Zoom, Google Meet, Teams, et cetera.

Extractive methods cannot be used for this task, because the main information is contained in the speech of different participants. That is the main idea. In the topic of my presentation I used the term dialogue, but of course I have experience not only with dialogue but also with meeting summarization. I want to note that dialogues and meetings are very different forms of conversation between people. A dialogue, or a podcast, because a podcast is very similar to a dialogue, is usually a conversation or other form of discourse between two or more individuals.

Usually the whole podcast is dedicated to one idea, so a podcast usually has one or two ideas presented in the form of dialogue. You can see an example on this slide. Meetings usually have a different structure, with more than two participants. Speech can be interrupted quite unexpectedly and can switch very quickly from one participant to another. Topics can also switch very quickly. For example, take a daily meeting: each team member presents some results, some problems, et cetera, and is sometimes interrupted. I think meeting summarization is more difficult than, for example, podcast or traditional dialogue summarization. So what is a meeting summary?

A meeting summary is a less formal version of meeting minutes. Maybe some of you work with software which helps to create reports of meetings, and you understand that it's a really difficult task. It's often an email that is sent as a recap or follow-up of the meeting, which gives a general overview of the discussions that were had and serves as a reminder of the tasks that were assigned to different members of the team, by putting action items in this email together with the employees assigned to each specific responsibility and the due dates.

The meeting summary holds each member accountable. Your email or meeting summary should also include any other important updates or project information as it was covered in the meeting. On the next slide you can see an example of such a report.

At the head of this email there is some general information about the meeting, such as the date of the meeting, the name of the meeting, et cetera. Next are the main ideas or talking points of the meeting. We don't know how many points there will be in a meeting, so that is also a challenge. And finally, we have reports for each participant: the name, the tasks related to this person, and also a date, for example the deadline for some task, et cetera. Of course, the template of this report depends on the domain. So, for example, for IT companies' meetings it's one template; for marketing it's another. On the next slide you can see the leaderboard of the chat summarization task on SAMSum. SAMSum is a dataset for text summarization; it can be used for training and for evaluation. This table presents the results of different summarization methods, such as extractive, abstractive, and pre-trained models. For the evaluation of summarization, the ROUGE metric is usually used. ROUGE stands for Recall-Oriented Understudy for Gisting Evaluation.

It's essentially a set of metrics for evaluating automatic summarization of text as well as machine translation. So these metrics are used both for machine translation and for text summarization. I recommend this paper. It's a good overview of meeting and dialogue summarization; you can find there descriptions of some approaches to dialogue summarization and their results. It's a paper from 2021, so it's not so old. I also cannot skip the data, because data is an important part of success in data science and also in NLP. On this slide I present some popular datasets for text summarization and meeting summarization. The first is the meeting corpus of the International Computer Science Institute in Berkeley, California. This corpus contains audio recorded simultaneously from head-worn and table-top microphones, word-level transcripts of meetings, and various metadata on participants, meetings, and hardware.

Such a corpus supports work in automatic speech recognition, noise robustness, dialogue modeling, prosody, rich transcription, information retrieval, et cetera. The next corpus is the AMI Meeting Corpus. It is a multimodal dataset consisting of 100 hours of meeting recordings.

Some of these meetings are naturally occurring, and some are elicited, particularly using a scenario in which the participants play different roles in a design team, taking a design project from kick-off to completion over the course of a day. QMSum consists of roughly 2,000 query-summary pairs over 232 meetings in multiple domains. SAMSum we discussed earlier in my presentation. MediaSum is a dataset which contains interviews, SummScreen contains TV series transcripts, and DialogSum is a new dataset that contains more than 10,000 dialogues with corresponding manually labeled summaries and also topics. I think it's very useful, because very often you need not only to recognize some information or create a summary; you need to understand how many topics and which topics are presented in a meeting, et cetera. So it's a very useful dataset. Now we can go to the general approach to meeting summarization. Some recent works on meeting summarization describe such approaches. I tried to create a general scheme for you, because I think it's not important now which method we use at the first stage of this approach. OK, now I will describe the general pipeline.

The disadvantage of abstractive models based on transformers is that they cannot work with long input text. So at the first stage we need to filter the input text and identify the most important sentences. For this we can use a statistical approach, or extractive or abstractive methods, and filter so that only the important sentences stay. After this, the resulting text is put into the abstractive method to generate the summary. For this step we can use BART, Pegasus, or something else. So that is the general approach. On this slide is an example of a similar approach to getting a meeting summary: at the first stage the authors used BERT for filtering the sentences, and for summary generation they used the Pegasus model. So you can combine different models and get good results. More information about this approach you can find at the link below the image on the slide. So, if you're interested in text, dialogue, or meeting summarization but you don't know how to start with this task, you can find a lot of models and datasets for text summarization on the Hugging Face resource.

I recommend using this resource if you are starting to work in NLP or data science. You can find models for English or for multi-language tasks. So you can find everything necessary on this site and try to do some experiments. OK, thank you very much, and if you have questions, I'm ready. Thank you. About Slavic languages? Yes, of course. On Hugging Face you can also find some models for Slavic languages, for Polish or for Ukrainian. Of course, maybe the quality is currently not so good, but you can train a model further for your task and make it better than before. Thank you very much.
